3 Problems We Encountered Using @xstate/test With TestCafe — and Their Solutions

«Disclaimer: In this article I do not explain how to use XState or what state charts are. If you are looking for learning material on XState, David Khourshid has put together an up-to-date list of resources.»

At smartive we always strive to improve our tech stack. That’s why after my colleague Robert Vogt told me about XState and its advantages in early 2019 I almost instantly fell in love with it. XState makes app logic easier to understand by declaratively describing what should happen when events occur and which explicit state the app has afterwards.

We quickly realised that with XState in place we can build higher quality apps which are easier to extend, better to maintain and in the end more scalable.

Since 2019 we have been developing apps for our clients using XState and have not looked back. Two examples are subsidia’s payment and stock management app and the Supply Chain App we developed for Migros (case studies in German).

After David Khourshid released his package @xstate/test, which enables a model-based testing approach, we experimented with it on side projects to simplify our testing. @xstate/test takes an already defined state machine and tests along its paths. This covers (most of) your use cases, which improves your tests and shortens the time needed to implement them.

Naturally we wanted to use @xstate/test in the wild. In summer 2020 subsidia, one of our clients and an early adopter of XState, asked us to test parts of their newly built back office app with @xstate/test. Over time their apps have grown quite a lot, so a conventional frontend testing approach could easily miss a case, and writing tests for all possible cases by hand is very time-consuming. That’s why they wanted us to integrate @xstate/test to make their lives easier.

The Test Case

Let us assume we needed to build an app to search for people. It should cover the following functionality:

- Initially list 10 people

- If possible, load 10 additional people (pagination)

- Search for a specific person (with a debounce to reduce useless API requests)

- Show an error if there was a network problem

- Show a notification if there are no results

- Show a button to jump to the top if no additional people can be loaded

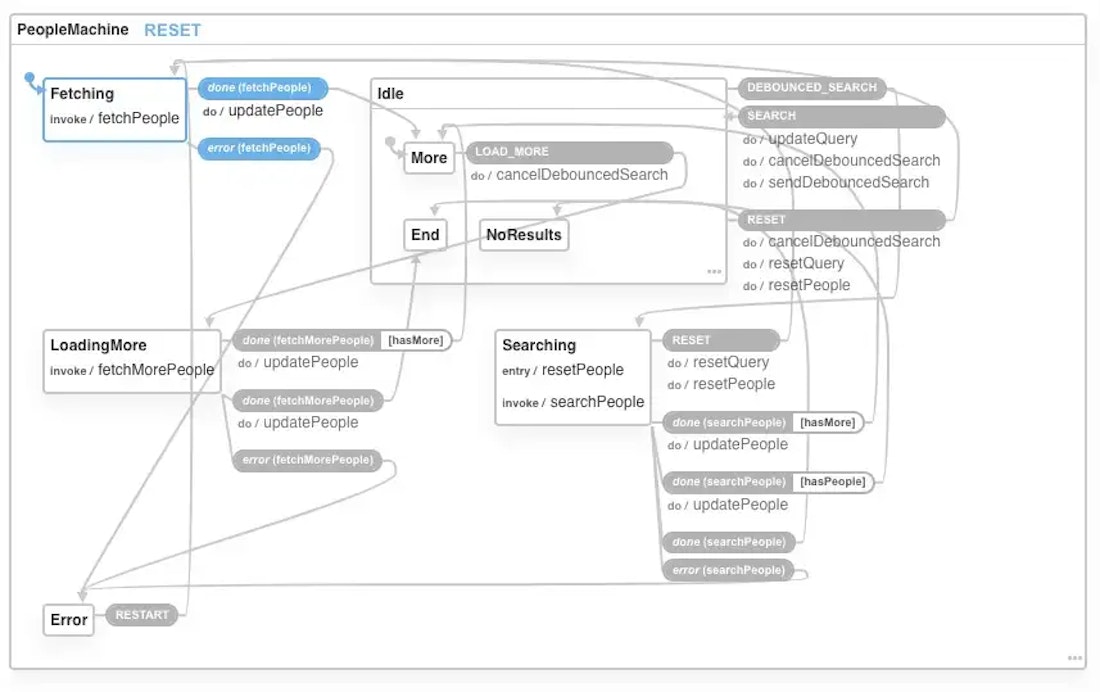

From the given requirements we derived the following state machine:

The Beginning

The @xstate/test Quick Start introduces the createModel function, which takes a state machine and generates test path plans for it. The created model exposes two functions to generate these plans. On the one hand, getSimplePathPlans returns all simple paths, that is, every path from the initial state to any other state that does not visit the same state twice. On the other hand, getShortestPathPlans returns only the shortest path from the initial state to each other state.
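To make the difference between the two kinds of paths concrete, here is a small sketch that computes both over a plain adjacency list. It does not use @xstate/test; the toy graph and the helper names simplePaths and shortestPaths are made up for illustration, and the real library works on the machine's states and context rather than on bare state names.

```typescript
// Hypothetical toy graph: state name -> states reachable via one event.
type Graph = Record<string, string[]>;

const graph: Graph = {
  A: ["B", "D"],
  B: ["C", "D"],
  C: ["A"],
  D: ["A"],
};

// All simple paths from `start`: paths that never visit a state twice.
function simplePaths(g: Graph, start: string): string[][] {
  const result: string[][] = [];
  const walk = (path: string[]): void => {
    result.push(path);
    const current = path[path.length - 1];
    for (const next of g[current] ?? []) {
      if (!path.includes(next)) walk([...path, next]);
    }
  };
  walk([start]);
  return result;
}

// One shortest path from `start` to each reachable state (breadth-first search).
function shortestPaths(g: Graph, start: string): Record<string, string[]> {
  const found: Record<string, string[]> = { [start]: [start] };
  const queue: string[] = [start];
  while (queue.length > 0) {
    const current = queue.shift() as string;
    for (const next of g[current] ?? []) {
      if (!(next in found)) {
        found[next] = [...found[current], next];
        queue.push(next);
      }
    }
  }
  return found;
}

// 5 simple paths: A, A>B, A>B>C, A>B>D, A>D
const allSimple = simplePaths(graph, "A");
// 4 shortest paths, one per state; D is reached directly via A>D
const allShortest = shortestPaths(graph, "A");
```

In this toy graph the simple paths reach D in two different ways, while the shortest paths only keep the direct one. That extra coverage is the reason to prefer simple paths when every traversal matters.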

For the given case we decided to go with getSimplePathPlans since we wanted to test every possible state traversal combination. And that's where we encountered our first problem: the guards.

The guards Problem

When using createModel, the state machine is executed in the context of the test framework without invoking any actual services. This leads to a problem: the services most likely fetch data, which alters the context and in turn determines the outcome of a guard. So calling getSimplePathPlans either fails at a specific guard because no real data is available, or the guard always returns its default value. In the latter case not all paths are generated and therefore not all available paths get tested.
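Both failure modes can be reproduced in isolation. The following sketch assumes hypothetical event shapes and a hypothetical implementation of isPeopleDoneEvent (the app's real types and event names are not shown in this article); it only illustrates what happens when a guard is evaluated for an event that carries no service result.

```typescript
// Hypothetical event shapes; the real app's types may differ.
type PeopleDoneEvent = {
  type: "done.invoke.people";
  data: { next: string | null; count: number };
};
type AnyEvent = { type: string; data?: unknown };

// Assumed implementation of the type guard used by the app's guards.
const isPeopleDoneEvent = (event: AnyEvent): event is PeopleDoneEvent =>
  event.type === "done.invoke.people";

const hasMore = (_context: unknown, event: AnyEvent): boolean =>
  isPeopleDoneEvent(event) && !!event.data.next;

// With a real service result the guard can go either way.
const withData = hasMore({}, {
  type: "done.invoke.people",
  data: { next: "/people?page=2", count: 10 },
});

// For any other event the guard always returns its default value: false.
const otherEvent = hasMore({}, { type: "FETCH" });

// A done event without a payload makes the guard throw on `event.data.next`.
let threw = false;
try {
  hasMore({}, { type: "done.invoke.people" });
} catch {
  threw = true;
}
```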

That's why we implemented a guard combination calculator: a function which takes a guards object and returns an array with all possible combinations of guard results. It builds a simple truth table in which each 0 or 1 is a possible result of a given guard function. To make this more comprehensible, let’s take the guards from the people search app:

```typescript
const guards = {
  hasQuery: ({ query }) => query !== null,
  hasMore: (_, event) => isPeopleDoneEvent(event) && !!event.data.next,
  hasPeople: (_, event) => isPeopleDoneEvent(event) && event.data.count > 0,
};
```

Each of the above guard functions can return either false or true (0 or 1) and therefore lead to a different path within the state chart. But since no actual service gets called while the state machine is evaluated, there is no data, so query or event.data, for instance, will never be defined. That's why our function takes the guards object and builds an array with all possible combinations:

```typescript
const guardCombinations = [
  {
    hasQuery: () => false,
    hasMore: () => false,
    hasPeople: () => false,
  },
  {
    hasQuery: () => true,
    hasMore: () => false,
    hasPeople: () => false,
  },
  {
    hasQuery: () => false,
    hasMore: () => true,
    hasPeople: () => false,
  },
  {
    hasQuery: () => false,
    hasMore: () => false,
    hasPeople: () => true,
  },
  {
    hasQuery: () => true,
    hasMore: () => true,
    hasPeople: () => false,
  },
  // and so forth...
];
```

Now we can iterate over the array and generate path plans for each combination by passing the generated guards into the machine as options.

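```typescript
import { Machine } from "xstate";
import { createModel } from "@xstate/test";

// Build one model per guard combination and collect all of their path plans.
const testPlans = guardCombinations.reduce((plans, guards) => [
  ...plans,
  ...createModel(Machine(statechartToTest, { guards }))
    .withEvents(testEvents)
    .getSimplePathPlans(),
], []);
```

Finally we have all our path plans. But how does @xstate/test actually test them?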
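For reference, the guard combination calculator described above could be sketched roughly as follows. This is a minimal illustration of the truth-table idea, not the code we shipped; the function name buildGuardCombinations is made up, and the order of the resulting rows may differ from the listing above.

```typescript
type ConstantGuards = Record<string, () => boolean>;

// Builds every true/false assignment for the given guard names (2^n rows).
function buildGuardCombinations(guards: Record<string, unknown>): ConstantGuards[] {
  const names = Object.keys(guards);
  const total = 2 ** names.length;
  const combinations: ConstantGuards[] = [];
  for (let row = 0; row < total; row++) {
    const combination: ConstantGuards = {};
    names.forEach((name, bit) => {
      // Bit number `bit` of the row index decides this guard's constant result.
      const value = ((row >> bit) & 1) === 1;
      combination[name] = () => value;
    });
    combinations.push(combination);
  }
  return combinations;
}

// Only the keys matter here; the original guard bodies are replaced entirely.
const combos = buildGuardCombinations({
  hasQuery: () => false,
  hasMore: () => false,
  hasPeople: () => false,
});
// 3 guards -> 8 combinations, from "all false" to "all true"
```

Note that the number of combinations doubles with every guard, and each combination produces its own set of path plans, so the generated test suite can grow quickly.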

The meta Problem

From the @xstate/test Quick Start we know that we need to add a meta property to each state we want to test. So the people search state chart would look like this:

```typescript
const statechart = {
  // ...
  id: "PeopleMachine",
  initial: "Fetching",
  states: {
    Fetching: {
      // ...
      meta: {
        test: ({ t }) =>
          t.expect(Page.Items.count).eql(0).expect(Page.Loading.exists).ok(),
      },
    },
    Idle: {
      // ...
      initial: "More",
      states: {
        More: {
          // ...
          meta: {
            test: ({ t }) =>
              t
                .expect(Page.Items.count)
                .eql(10)
                .expect(Page.MoreButton.exists)
                .ok(),
          },
        },
        End: {
          // ...
          meta: {
            test: ({ t }) =>
              t
                .expect(Page.Items.count)
                .gt(0)
                .expect(Page.MoreButton.exists)
                .notOk()
                .expect(Page.GoToTopButton.exists)
                .ok(),
          },
        },
        NoResults: {
          // ...
          meta: {
            test: ({ t }) =>
              t
                .expect(Page.Items.count)
                .eql(0)
                .expect(Page.NoResults.exists)
                .ok(),
          },
        },
      },
    },
    // ...
  },
};
```

But we do not want test code in our state charts, as it would be delivered to the client’s browser. Therefore we wanted to extract the meta code and programmatically append it to the given state chart, so that the test code can be written separately:

```typescript
const tests: StatesTestFunctions<Context, TestContext> = {
  Fetching: ({ t }) =>
    t.expect(Page.Items.count).eql(0).expect(Page.Loading.exists).ok(),
  Idle: {
    More: ({ t }) =>
      t.expect(Page.Items.count).eql(10).expect(Page.MoreButton.exists).ok(),
    End: ({ t }) =>
      t
        .expect(Page.Items.count)
        .gt(0)
        .expect(Page.MoreButton.exists)
        .notOk()
        .expect(Page.GoToTopButton.exists)
        .ok(),
    NoResults: ({ t }) =>
      t.expect(Page.Items.count).eql(0).expect(Page.NoResults.exists).ok(),
  },
  // ... additional state tests
};
```

Tim Deschryver wrote a very nice article on how he did this in combination with Cypress. We took his approach as a basis, added the above guard combination logic and a logging mechanism, and released it as a package called @smartive/xstate-test-toolbox.

This package contains the helper createTestPlans, which can be used with xstate and @xstate/test.
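The core idea of keeping tests separate and attaching them later can be sketched in a few lines. The following is not the implementation of the package; the types and the function withTests are hypothetical and heavily simplified, and only show how a nested tests object could be merged into a state chart as meta.test properties without mutating the original.

```typescript
// Minimal shapes for this sketch; real XState configs have many more fields.
type TestFn = (testContext: unknown) => unknown;
type TestTree = { [state: string]: TestFn | TestTree };
type StateConfig = {
  states?: Record<string, StateConfig>;
  meta?: { test: TestFn };
  [key: string]: unknown;
};

// Returns a copy of `config` in which each state with a matching test
// function carries it as `meta.test`; nested test objects are applied recursively.
function withTests(config: StateConfig, tests: TestTree): StateConfig {
  const source = config.states;
  if (!source) return config;
  const states: Record<string, StateConfig> = {};
  for (const name of Object.keys(source)) {
    const child = source[name];
    const entry = tests[name];
    if (typeof entry === "function") {
      states[name] = { ...child, meta: { test: entry } };
    } else if (entry) {
      states[name] = withTests(child, entry);
    } else {
      states[name] = child;
    }
  }
  return { ...config, states };
}

// Usage with a stripped-down version of the people search chart.
const chart: StateConfig = {
  initial: "Fetching",
  states: {
    Fetching: {},
    Idle: { initial: "More", states: { More: {}, End: {} } },
  },
};
const chartWithTests = withTests(chart, {
  Fetching: () => "fetching checked",
  Idle: { More: () => "more checked" },
});
```

Because the shipped state chart never references the tests object, the test code stays out of the production bundle.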

The createTestPlans function from the package helped us to generate all possible test paths for our state machines:

```typescript
const tests = {
  Fetching: ({ t }) =>
    t.expect(Page.Items.count).eql(0).expect(Page.Loading.exists).ok(),
  // ...
};

const testEvents = {
  /* Will be discussed in the next section. */
};

const testPlans = createTestPlans({ machine, tests, testEvents });

// Iterate over plans and test paths
testPlans.forEach(({ description: plan, paths }) => {
  fixture(plan).page("http://localhost:8080");

  paths.forEach(({ test: run, description: path }) => {
    test(`via ${path} ⬏`, (t) => run({ plan, path, t }));
  });
});
```

So now we have generated all tests from our state charts and magically everything gets tested. Well, not quite. Since @xstate/test expects us to define which user event or other event triggers a state change, we ran into the next problem.

The async Problem

The idea behind @xstate/test is that we define what should be tested in state X and what kind of action (e.g. a button click) transitions the app into the next state Y. So how do we test invoked services?

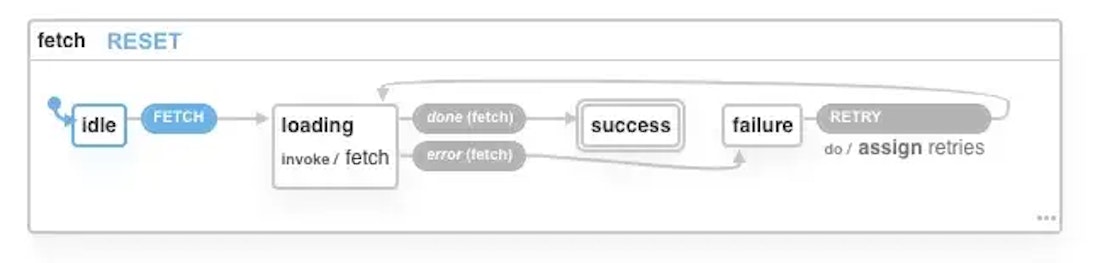

Let’s consider the following state chart as an example:

With the following test code:

```typescript
const tests = {
  idle: ({ t }) =>
    t.expect(Selector('[data-test="idle"]').exists).ok(),
  loading: ({ t }) =>
    t.expect(Selector('[data-test="loading"]').exists).ok(),
  success: ({ t }) =>
    t.expect(Selector('[data-test="success"]').exists).ok(),
  failure: ({ t }) =>
    t.expect(Selector('[data-test="failure"]').exists).ok(),
};

const testEvents = {
  FETCH: ({ t }) => t.click('[data-test="fetch"]'),
  // "done.invoke.fetch": what action can we trigger here?
  // "error.platform.fetch": or here?
};
```

In our initial state idle we test that the user interface shows some kind of idle element. To transition from idle to loading we tell @xstate/test to click the element with the selector [data-test="fetch"] to trigger the FETCH event within the app. As we would expect, the loading state invokes the fetch service and then transitions directly into success. But that leads to two problems:

- How can we test the loading state deterministically?

- What kind of action (e.g. click, type, etc.) do we have to define within our test event done.invoke.fetch to get the user interface and the underlying state machine from loading to success?

The above example is a common use case within apps built with XState. To solve this problem when using TestCafe, we released another package called @smartive/testcafe-utils which contains util classes and functions.
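The underlying idea is to hold a request open until the test explicitly releases it, so that the in-between state stays on screen long enough to be asserted. Here is a minimal sketch of that idea, independent of the package: the class RequestGate and its methods hold and release are hypothetical and are not the API of @smartive/testcafe-utils.

```typescript
// Hypothetical helper illustrating "keep a call pending until the test says go".
class RequestGate {
  private pending = new Map<string, Array<() => void>>();

  // Called on behalf of an intercepted request: the returned promise
  // stays unresolved until `release` is called with the same name.
  hold(name: string): Promise<void> {
    return new Promise<void>((resolve) => {
      const queue = this.pending.get(name) ?? [];
      queue.push(() => resolve());
      this.pending.set(name, queue);
    });
  }

  // Called by the test: lets all held requests with that name continue
  // and returns how many were released.
  release(name: string): number {
    const queue = this.pending.get(name) ?? [];
    this.pending.delete(name);
    queue.forEach((resume) => resume());
    return queue.length;
  }
}

// Usage: the "request" only completes after the test releases it.
async function demo(): Promise<string[]> {
  const gate = new RequestGate();
  const log: string[] = [];
  const request = gate.hold("fetch").then(() => {
    log.push("success");
  });
  log.push("loading"); // the loading state could be asserted here
  gate.release("fetch");
  await request;
  return log;
}
```

With such a gate in front of the network call, the loading state is no longer a race against the server: it lasts exactly until the test decides to move on.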

The package contains an interceptor class for XHR-calls, among other functions and classes, which can be initialised like:

```typescript
const xhrInterceptor = new XHRInterceptor({
  fetch: {
    method: "GET",
    pattern: /.*\/fetch\/?.*/,
  },
});
```

It can then be injected before our app loads with the help of TestCafe’s clientScripts, like test.clientScripts([xhrInterceptor.clientScript()]). Now every call to .../fetch gets intercepted and kept unresolved until xhrInterceptor.resolve is called. This lets us define actions for invoke events like the ones above. Now we can write:

```typescript
const testEvents = {
  FETCH: ({ t }) => t.click('[data-test="fetch"]'),
  "done.invoke.fetch": xhrInterceptor.resolve("fetch"),
  "error.platform.fetch": xhrInterceptor.resolve("fetch"),
};
```

The Result

The whole code of the app above can be viewed on GitHub. It also has a workflow in place which successfully runs the generated tests. Additionally you can play around with the app on CodeSandbox or just view it on Vercel.

For those of you who want to see a more “real world” example I forked the cypress-realworld-app repo and added @xstate/test and TestCafe on top of it. You can find it as a pull request to my fork of the app. The pull request is split into two commits: The first contains changes I had to make to improve the testability of the app. (I added comments to explain why the changes are needed.) The second commit contains the actual test code. Feel free to add comments or questions to the pull request.

Conclusion

While writing tests for our customer we solved the guards, meta and async problems. We also discovered that several states had no appropriate UI representation and could not be tested as implemented. That’s why we had to add user interface elements and partially adjust the state machine. In the end this led to a better, more reliable user experience overall.

If you already use XState, we highly recommend trying @xstate/test in combination with our @smartive/xstate-test-toolbox. If you are using TestCafe as well, you are welcome to use @smartive/testcafe-utils to help with intercepting API calls or mocking time and date.

What do you use as user interface and/or data state management? How do you test these apps? What do you think about the solution above? We would love to hear about your challenges and how you solved them! Leave us a comment below or let’s get in touch!

Written by

Dominique Wirz