How we simplified our Kubernetes deployments with an alternative to Helm

This article assumes that you know what Kubernetes is and how deployments on Kubernetes work. If you don’t, I recommend reading the two linked posts by Daniel Sanche.

The Beginning

«In the beginning there was the w̶o̶r̶d̶ YAML – and there was a lot of it!»

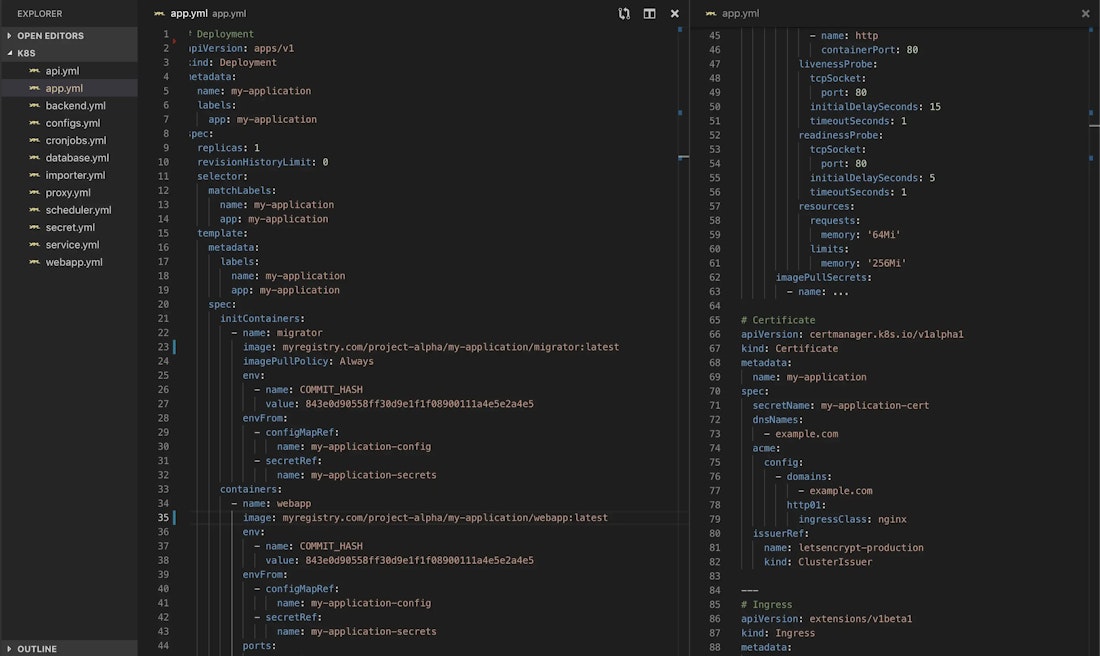

When you want to deploy an application to Kubernetes, you have to provide manifests in the form of YAML files that tell Kubernetes what the deployment should look like. As the number of applications and the complexity of your infrastructure grow, you eventually end up with many, many lines of YAML. That isn’t necessarily a bad thing, but the files look pretty similar for most applications (even across different technologies).

If you’ve been in computer science for some time, this repetitive “code” will immediately cause some discomfort, because you’d rather avoid repetition. Imagine you wanted to change something as simple as the name of your deployment. You’d probably end up updating the same metadata.name field in your deployment.yml, service.yml, ingress.yml, certificate.yml, configmap.yml and secret.yml, not to mention spec.selector.matchLabels.name and spec.template.metadata.labels.name, which you might want to adjust as well.

Our Journey

So we needed a way to reduce the verbosity of our YAML files. Since we use GitLab to host our source code and to build and deploy our applications, we started putting placeholders into our Kubernetes manifests and used GitLab’s variables feature to substitute values like metadata.namespace or metadata.name. To run the substitution, we added some bash scripts that we could call from inside the GitLab pipeline, looking something like this:

```yaml
# .gitlab-ci.yml
stages:
  - deploy

deploy:
  stage: deploy
  variables:
    K8S_NAMESPACE: staging
  script:
    - chmod +x ./*
    - mkdir -p k8s/deploy
    # deploy.sh expects three arguments, in this order
    - /bin/bash deploy.sh $CI_PROJECT_NAMESPACE $CI_PROJECT_NAME $K8S_NAMESPACE
    - kubectl apply -f ${CI_PROJECT_DIR}/k8s/deploy
```

```bash
#!/usr/bin/env bash
# deploy.sh: render every template in k8s/ into k8s/deploy/,
# replacing ${VAR} / $VAR placeholders with environment variables.
export CI_PROJECT_NAMESPACE=${1}
export CI_PROJECT_NAME=${2}
export K8S_NAMESPACE=${3}

for f in k8s/*.yml
do
  envsubst < "$f" > "k8s/deploy/$(basename "$f")"
done
```

This improved the experience a lot, since we could now change the values of the GitLab variables and have the changes reflected in our running system.
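One thing to be aware of with this approach: envsubst replaces a reference to a variable that isn’t set with an empty string, so a missing GitLab variable quietly produces a manifest with an empty value instead of failing the job. The sketch below is not part of our original pipeline; it is a hypothetical pre-flight check (the function names list_placeholders and check_placeholders are made up for illustration) that lists the variables referenced in the templates and fails if any of them is unset.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check, not part of our original pipeline:
# envsubst turns references to unset variables into empty strings,
# so fail early if a template uses a variable that isn't set.

# Print each distinct variable name referenced as ${NAME} or $NAME
# in the given template files.
list_placeholders() {
  grep -ohE '\$\{[A-Za-z_][A-Za-z0-9_]*\}|\$[A-Za-z_][A-Za-z0-9_]*' "$@" \
    | tr -d '${}' \
    | sort -u
}

# Report every referenced variable that is unset; return 1 if any is.
check_placeholders() {
  local name missing=0
  for name in $(list_placeholders "$@"); do
    # ${!name+set} expands to "set" only if the variable named $name is set
    if [[ -z ${!name+set} ]]; then
      echo "unset variable: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

It could run right before the substitution step, for example as check_placeholders k8s/*.yml. Note that it treats every $NAME in the templates as a placeholder, exactly as envsubst does.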

As our use of placeholders and shell scripts (and the sometimes ugly things we did with them) grew, we couldn’t help but notice that all of a sudden we had multiple voodoo-magic.sh files in our repositories which:

- couldn't really be unit tested

- weren’t easy to understand

- looked very similar across our various projects

Similar files… Rings a bell somehow. We immediately wanted to extract those scripts into a place where we could manage them and share them between our different repositories (and no developer would have to look at them).

The (official?) Solution

Since we weren’t the first ones to run into Kubernetes YAML files piling up into a mess, there is actually a tool that helps you tame it. The smart people at Deis (since acquired by Microsoft) and Google had been working on this problem for quite some time. At some point they decided to join forces, which resulted in the now very popular Helm package manager. It unites the features of the Kubernetes port of “Google Cloud Deployment Manager” and what is now called “Helm Classic”.

«Helm helps you manage Kubernetes applications — Helm Charts helps you define, install, and upgrade even the most complex Kubernetes application.- helm.sh website»

At its core, and amongst other features, Helm manages templates of YAML files (packaged as so-called charts) and replaces the placeholders inside those files with the values you provide in a values.yaml file. Another argument in favour of Helm is that many tools, like the well-known cert-manager, already ship their own charts, which turns installing them into a one-liner:

```bash
helm install --name my-release stable/cert-manager
```

«Hey, that sounds exactly like what you need. Why not just use Helm?»

Yeah, we asked ourselves that many, many times! So why didn’t we? Why struggle to come up with our own solution?

One issue with Helm (version 2) is a potential security vulnerability: Tiller, the server component of Helm, has to run inside your cluster and needs privileged access rights to apply and delete deployments. So if an attacker gained access to Tiller from inside your cluster, they could apply and delete deployments too. In the worst case, if you run Tiller with cluster-admin permissions so that it can create and delete namespaces, service accounts or cluster role bindings (which you probably end up needing anyway, and many people do), an attacker with access to that Tiller instance could delete your deployments and even shut down your entire cluster.

Many of you probably know that there are ways to minimise that risk, and some will argue that it’s a pretty unlikely scenario anyway, but for us, at that point, it simply wasn’t an option. Back then the Helm project wasn’t even part of the CNCF. Now that the team behind Helm is working on version 3 without Tiller, and since we’ve learnt that you can use the Helm client without Tiller, the question “why not just use Helm?” comes up again.

But there’s another argument.

To help our clients choose tools and technologies, we like to know how things work in detail. A good example of this is my first contact with the monitoring toolset Prometheus and Grafana. If we had used Helm, I probably would’ve installed one of the many available Helm charts to monitor our cluster. Since I couldn’t use Helm and therefore assembled all of the needed manifests myself, I’d claim that I have a much better understanding of the various components running on our cluster. This helps me identify problems in our cluster and give well-founded advice to our customers when they ask about a certain piece of software.

Our Solution

As mentioned before, we were quite happy with using templates with placeholders, but we were still looking for a better way to run the variable substitution and get rid of the ugly collection of custom scripts. At the same time, we realised that we frequently wrapped similar-looking script snippets around the kubectl apply -f ... command in our .gitlab-ci.yml files. This gave us the idea of creating our own command line tool with simple commands to manage our deployments.

I’m fortunate enough to work for a company that supports ideas like that and, even better, my colleague Christoph Bühler implements them at lightning speed. The next thing I knew, our new interactive CLI deployment tool “kuby” was born. It’s an open source project and you can find it on GitHub. Kuby wraps kubectl and provides commands like kuby prepare to replace variables in YAML files, or kuby deploy to prepare and apply deployments to Kubernetes. It’s written in TypeScript, runs on Node.js and uses yargs to parse and handle CLI commands and options. Thanks to TypeScript, we can finally unit test our deployment “scripts” to make sure everything still works when we make adjustments.

We packaged kuby into a Docker image and provide multiple images for the different kubectl versions. Using this image, the GitLab CI configuration to deploy an application with YAML templates to Kubernetes now looks as simple as this:

```yaml
stages:
  - deploy

deploy:
  stage: deploy
  image: smartive/kuby
  script:
    - kuby --ci deploy
```

The command kuby --ci deploy searches for all *.yml files in a given folder (./k8s by default), replaces every ${MY_VARIABLE} or $MY_VARIABLE with the value of the corresponding environment variable (using envsubst) and executes kubectl apply -f . from inside that folder. On top of that, the CLI tool offers some neat features like creating namespaces, switching contexts or creating secrets, which simplifies some daily tasks when working with Kubernetes and speeds up our workflow.
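To make the steps of kuby --ci deploy a little more concrete, here is a rough shell sketch of the same idea: find the templates, substitute the variables, apply the result. It is not kuby’s implementation (which is written in TypeScript); the function names substitute_env, render_manifests and deploy_manifests are hypothetical, and substitute_env is a pure-bash stand-in for envsubst so the sketch runs without gettext installed. Like envsubst, it replaces unset variables with an empty string and leaves anything that isn’t a valid variable reference, such as $5, untouched. Unlike the description above, it writes the rendered files into a separate folder (as the earlier deploy.sh did) and applies that folder.

```bash
#!/usr/bin/env bash
# Rough sketch of the steps described for `kuby --ci deploy`; NOT kuby's
# source. Function names are hypothetical, and substitute_env is a
# pure-bash stand-in for envsubst.

# Replace ${NAME} and $NAME with the environment variable NAME (an empty
# string if it is unset, as envsubst does). Reads stdin, writes stdout.
substitute_env() {
  local re='\$\{([A-Za-z_][A-Za-z0-9_]*)\}|\$([A-Za-z_][A-Za-z0-9_]*)'
  local line out match name
  while IFS= read -r line || [[ -n $line ]]; do
    out=""
    # Consume the line from left to right, one placeholder at a time,
    # so substituted values are never scanned again.
    while [[ $line =~ $re ]]; do
      match=${BASH_REMATCH[0]}
      name=${BASH_REMATCH[1]:-${BASH_REMATCH[2]}}
      out+="${line%%"$match"*}${!name-}"
      line=${line#*"$match"}
    done
    printf '%s\n' "$out$line"
  done
}

# Render every *.yml in SRC_DIR (default ./k8s) into OUT_DIR
# (default SRC_DIR/deploy).
render_manifests() {
  local src_dir=${1:-./k8s}
  local out_dir=${2:-$src_dir/deploy}
  local file
  mkdir -p "$out_dir" || return
  for file in "$src_dir"/*.yml; do
    [[ -e $file ]] || continue   # the glob matched nothing
    substitute_env < "$file" > "$out_dir/$(basename "$file")"
  done
}

# Render, then apply the rendered folder from inside it.
# Needs kubectl and a configured cluster context.
deploy_manifests() {
  local src_dir=${1:-./k8s}
  local out_dir=${2:-$src_dir/deploy}
  render_manifests "$src_dir" "$out_dir" || return
  (cd "$out_dir" && kubectl apply -f .)
}
```

For example, after export APP_NAME=my-app K8S_NAMESPACE=staging (APP_NAME is just an illustrative variable), render_manifests ./k8s lets you inspect the output in ./k8s/deploy before deploy_manifests applies it. Keeping rendering and applying in separate functions is what makes the substitution testable without a cluster.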

We’re pretty happy with this solution, as it’s lightweight compared to Helm, but we’re still working on improving other parts of our CI/CD pipeline. Some tasks on our list are optimising the handling of .gitlab-ci.yml files, simplifying the Google Cloud Build configuration and introducing the golden image pattern for our Docker images. So maybe there will be a blog post about one of those topics in the future :-)

Try it yourself

Feel free to check out kuby and see if it can improve your own deployments. Take a look at the readme in our repository to get started and drop a comment if you have any questions.

Written by

Josh Wirth