Improve Your Full Text Search

How to Tune a Full Text Search Engine Based on Real User Feedback

In this blog post I will show you how we can leverage real-world user data to tune a search engine based on quantitative analysis. I will cover the following questions:

- What are the key metrics used for information retrieval in full-text searches?

- How can we leverage real-world user data to improve our full-text search?

- How can we run a quantitative analysis on our full-text search engine?

- How can we make sure that changing our Elasticsearch query does not negatively affect the overall quality of our full-text search engine?

- How can we sustainably improve the quality of our full-text search engine?

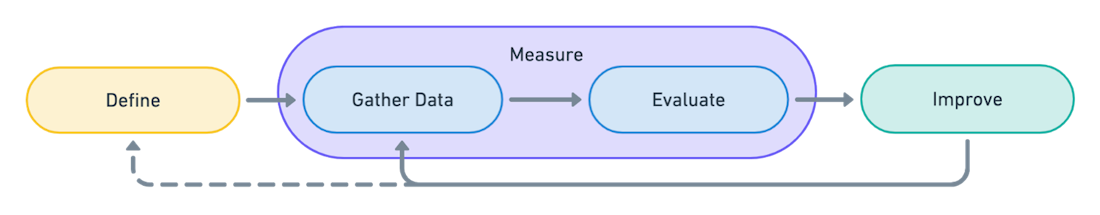

I will dive into defining key metrics, collecting real-world user data, running a quantitative analysis on the full-text search, and finally iterating to improve our key metrics.

TL;DR

- Define the goal of the search engine and select matching KPIs

- Log users' search terms and their result clicks. Treat that log as ground truth, on the assumption that users found the result they were looking for.

- Use that log to calculate our search engine's KPIs based on the users' queries. Our KPIs will be a measure of our search engine’s success.

- Never ever change an Elasticsearch query, index schema or data without reevaluating our KPIs

Define the Key Metrics

Before we start optimization, we must be very clear about the goal we want our search engine to fulfill. What kind of information needs should our search engine cover? Who uses the search engine?

Are our customers interested in a single result (e.g. which movie should I watch next? which sneaker should I buy?) or in a list of possible results to get an overview (e.g. in which emails did I write about the upcoming summer party? which documents are about customer X?)?

Depending on the use case, we can then define our key performance indicators (KPIs) to measure the success of our search engine. The common KPIs for information retrieval can be roughly divided into two categories: sensitivity and specificity.

Sensitivity - Measures the success of finding a relevant hit among the first results.

Specificity - Measures the success of finding all relevant records among the first results.

Choose the relevant KPIs depending on the use case and context.

Sensitivity KPIs

We want to present our customer with the best matching result at the top of the search results. In most cases, the customer will leave if the desired result is not listed among the first X results, or at least on the first page of search results.

Hit-Rate@K: The percentage of queries for which the hit requested by the customer is among the first K results. The higher the better. It's the same as Recall@K (see below) if each query has exactly one relevant result.

MRR (Mean Reciprocal Rank): The average, over all queries, of the reciprocal rank (1/rank) of the hit requested by the customer. A hit at position 1 scores 1, a hit at position 2 scores 0.5, and so on. The higher the better.
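As a minimal sketch, the two sensitivity KPIs could be computed from the position at which each clicked result appeared. The function names and the example ranks are hypothetical and only serve as illustration; a result that was not returned at all is assumed to be recorded as `None`.

```python
from typing import Optional, Sequence


def hit_rate_at_k(ranks: Sequence[Optional[int]], k: int) -> float:
    """Share of queries whose requested hit appeared within the first k results.

    ranks holds the 1-based position of the requested hit per query,
    or None when the hit was not returned at all.
    """
    if not ranks:
        return 0.0
    hits = sum(1 for rank in ranks if rank is not None and rank <= k)
    return hits / len(ranks)


def mean_reciprocal_rank(ranks: Sequence[Optional[int]]) -> float:
    """Average of 1/rank per query; a missing hit contributes 0."""
    if not ranks:
        return 0.0
    return sum(1.0 / rank for rank in ranks if rank is not None) / len(ranks)


# Hypothetical ranks for four queries: positions 1, 3, 12, and one miss.
example_ranks = [1, 3, 12, None]
print(hit_rate_at_k(example_ranks, k=10))
print(mean_reciprocal_rank(example_ranks))
```

Two of the four hits fall within the first 10 results, so Hit-Rate@10 is 0.5, while MRR also rewards how close to the top each hit was.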

Specificity KPIs

We want to present our customer with a list of possible matches in descending order of relevance. The exact ranking is less important, but all relevant results must be listed.

Recall@K: How many of the desired results are listed among the first K results? The higher the better. A Recall@10 of 0.6 means that 3 out of 5 desired results are retrieved within the first 10 results.

DCG (Discounted Cumulative Gain): Measures how many highly relevant results are among the top results. It penalizes highly relevant results that are listed further down the search results.
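The following sketch shows one way these two KPIs could be calculated for a single query. It assumes a common DCG formulation (gain divided by log2 of the position plus one); other variants exist, and the document IDs and gain values are made up for illustration.

```python
import math
from typing import Dict, Sequence, Set


def recall_at_k(retrieved: Sequence[str], relevant: Set[str], k: int) -> float:
    """Fraction of the relevant documents that appear in the first k results."""
    if not relevant:
        return 0.0
    found = len(set(retrieved[:k]) & relevant)
    return found / len(relevant)


def dcg_at_k(retrieved: Sequence[str], gains: Dict[str, float], k: int) -> float:
    """Sum of gain / log2(position + 1) over the first k results (1-based)."""
    return sum(
        gains.get(doc_id, 0.0) / math.log2(position + 1)
        for position, doc_id in enumerate(retrieved[:k], start=1)
    )


# Hypothetical result list: 3 of the 5 relevant documents are retrieved.
retrieved = ["d1", "d7", "d3", "d9", "d4"]
relevant = {"d1", "d3", "d4", "d5", "d6"}
print(recall_at_k(retrieved, relevant, k=10))

# The same two documents in a different order yield a different DCG.
gains = {"d1": 3.0, "d3": 1.0}
print(dcg_at_k(["d1", "d3"], gains, k=10))
print(dcg_at_k(["d3", "d1"], gains, k=10))
```

The recall example mirrors the 3-out-of-5 case from the text, and the DCG comparison shows that placing the more relevant document first gives the higher score.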

Even more KPIs

This is just a selection of possible KPIs. Check out this Wikipedia article for additional documentation and even more evaluation measures.

Measure

After we defined our key metrics we can now measure and evaluate our current search engine implementation.

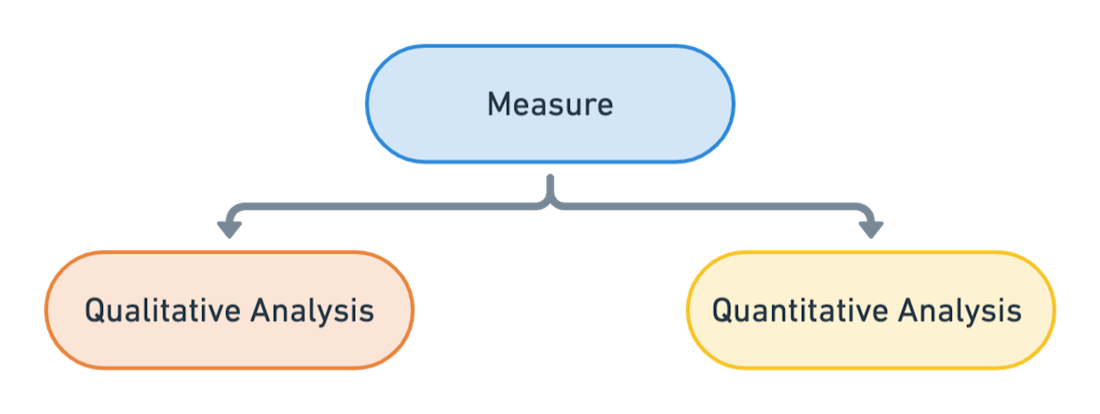

To be able to take measurements we first need some data. There are two options: qualitative analysis and quantitative analysis.

When we do a qualitative analysis, we focus our evaluation on individual use cases and put as much effort as possible into generating the ground truth of our test data. This means we manually define all matching documents for a given set of query strings. This is often tedious work and only covers a very small subset of our search engine’s use cases. So we will do a qualitative analysis only for a selected set of very important use cases.

Quantitative analysis, on the other hand, follows a different principle: the more data we have, the better. The data does not always have to be 100% accurate. For a quantitative analysis we can use the log data from users of our search engine.

Gather Data

Collect log data of the search engine in action. Log what the customer searches for and which result they click on.

If we make the assumption that the clicked result is the desired best match for the given search query, then we can use the log data to evaluate our search engine. While that’s an assumption that may not be 100% accurate, we’re much better off than relying on our gut or optimizing only for the manager who says the most expensive sneaker should be listed at the top.

To gather the log data we need to implement some tracking on our search results page. As soon as the user clicks on a search result, we track the user's search term together with the ID of the search result that was clicked. For further long-term analysis it's often useful to also log the rank of the clicked search result (was it the first displayed result or in position 24?). But for our use case we currently only need the search term together with the clicked result. That should give you a log of search term and clicked result ID pairs.

Make sure to collect enough log data. Around 100,000 search term/result click pairs should do fine. Also take into account seasonal differences in search behaviour, if applicable. The log data should provide a comprehensive picture of our users’ search behaviour.
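As an illustration, click tracking on the server side could be sketched like this. The JSON-lines format, the field names (`search_term`, `clicked_id`, `rank`, `timestamp`) and both function names are assumptions for this example, not a prescribed log schema; the document IDs are reused from the examples in this post.

```python
import io
import json
import time
from typing import Iterable, List, Optional, TextIO, Tuple


def log_search_click(out: TextIO, search_term: str, clicked_id: str,
                     rank: Optional[int] = None) -> None:
    """Append one click event as a JSON line."""
    record = {
        "timestamp": time.time(),
        "search_term": search_term,
        "clicked_id": clicked_id,
    }
    if rank is not None:
        record["rank"] = rank  # optional, useful for later long-term analysis
    out.write(json.dumps(record) + "\n")


def read_click_pairs(lines: Iterable[str]) -> List[Tuple[str, str]]:
    """Read the log back as (search term, clicked ID) pairs."""
    pairs = []
    for line in lines:
        if line.strip():
            record = json.loads(line)
            pairs.append((record["search_term"], record["clicked_id"]))
    return pairs


# Usage with an in-memory buffer standing in for a real log file.
buffer = io.StringIO()
log_search_click(buffer, "banana", "bananabread-recipe-123", rank=1)
log_search_click(buffer, "apple", "apple-pie-531")
pairs = read_click_pairs(buffer.getvalue().splitlines())
print(pairs)
```

Keeping the rank optional means the same log can serve both the evaluation described here and later analyses of click positions.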

Evaluate

Now we can feed that data into our search engine and calculate the key metrics for every search term/result click pair.

Let's apply that to our search query. Suppose we use a simple match query on the title field of our documents:
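If we want to calculate the scores on our own, the evaluation loop could look roughly like the sketch below. The `search` callable is a placeholder for whatever executes the real query and returns ranked document IDs; `fake_search` and its extra document IDs are invented stand-ins so the example runs without a search cluster.

```python
from typing import Callable, Iterable, List, Tuple


def evaluate_hit_rate(
    pairs: Iterable[Tuple[str, str]],
    search: Callable[[str], List[str]],
    k: int = 10,
) -> float:
    """Share of logged pairs whose clicked ID is among the first k results."""
    total = 0
    hits = 0
    for search_term, clicked_id in pairs:
        total += 1
        if clicked_id in search(search_term)[:k]:
            hits += 1
    return hits / total if total else 0.0


def fake_search(term: str) -> List[str]:
    """Stand-in for the real search call; returns ranked document IDs."""
    canned_results = {
        "banana": ["bananabread-recipe-123", "banana-split-7"],
        "apple": ["apple-juice-9"],
    }
    return canned_results.get(term, [])


log_pairs = [("banana", "bananabread-recipe-123"), ("apple", "apple-pie-531")]
score = evaluate_hit_rate(log_pairs, fake_search, k=10)
print(score)
```

With one relevant result per logged pair, this hit rate is the same value as Recall@K.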

    GET /_search
    {
      "query": {
        "match": {
          "title": "banana"
        }
      }
    }

To calculate the Recall@10 KPI scores, we can either execute the queries and calculate the scores on our own, or use the Ranking Evaluation API. For that, we add each entry of our data log to the requests array of the Ranking Evaluation API request. The user's search term goes into the requests[].request.query.match query and the clicked document ID is listed as the document within the requests[].ratings array:

    POST /my-index/_rank_eval
    {
      "requests": [
        {
          "id": "test_query_1",
          "request": {
            "query": { "match": { "title": "banana" } }
          },
          "ratings": [
            { "_index": "my-index", "_id": "bananabread-recipe-123", "rating": 1 }
          ]
        },
        {
          "id": "test_query_2",
          "request": {
            "query": { "match": { "title": "apple" } }
          },
          "ratings": [
            { "_index": "my-index", "_id": "apple-pie-531", "rating": 1 }
          ]
        }
      ],
      "metric": {
        "recall": {
          "k": 10,
          "relevant_rating_threshold": 1
        }
      }
    }

This will then deliver our final KPI metric score:

    {
      "rank_eval": {
        "metric_score": 0.4,
        "details": { ... }
      }
    }

With the integrated Ranking Evaluation API you can easily change the metric to MRR (mean_reciprocal_rank).
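With 100,000 log entries nobody wants to write the request body by hand. A minimal sketch of generating it from the logged pairs, with the metric as a parameter, might look like this. The function name and its defaults are assumptions for illustration; the resulting dictionary would be sent as the JSON body of the `_rank_eval` request with whatever HTTP or Elasticsearch client is in use.

```python
import json
from typing import Any, Dict, Iterable, Tuple


def build_rank_eval_body(
    pairs: Iterable[Tuple[str, str]],
    index: str = "my-index",
    field: str = "title",
    metric: str = "recall",
    k: int = 10,
) -> Dict[str, Any]:
    """Turn (search term, clicked ID) log pairs into a _rank_eval request body."""
    if metric not in ("recall", "mean_reciprocal_rank"):
        raise ValueError(f"unsupported metric: {metric}")
    requests = [
        {
            "id": f"test_query_{number}",  # request IDs must be unique
            "request": {"query": {"match": {field: search_term}}},
            "ratings": [{"_index": index, "_id": clicked_id, "rating": 1}],
        }
        for number, (search_term, clicked_id) in enumerate(pairs, start=1)
    ]
    return {
        "requests": requests,
        "metric": {metric: {"k": k, "relevant_rating_threshold": 1}},
    }


log_pairs = [("banana", "bananabread-recipe-123"), ("apple", "apple-pie-531")]
body = build_rank_eval_body(log_pairs, metric="mean_reciprocal_rank")
print(json.dumps(body, indent=2))
```

Switching between Recall@K and MRR then only changes the key inside `metric`, while the requests and ratings stay the same.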

Now you're ready to go! The metric_score is the first evaluation of our search engine. This will be our KPI which we will now try to improve.

Improve and Adapt

With the first evaluation of our search engine we have an overview of its current quality. We might already see some queries or search combinations that performed badly.

Now it is time to analyze our results. Can we improve our search query? Maybe we need to change our index schema? Maybe we need to improve or enhance our data? Do we still have the right performance metrics to measure how well we satisfy our customers' information needs?
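To spot the weakest queries, the per-query part of the evaluation response can be sorted by score. The sketch below assumes that `details` maps each request ID to an object carrying its own `metric_score`; the function name and the sample values are hypothetical.

```python
from typing import Any, Dict, List, Tuple


def worst_queries(details: Dict[str, Dict[str, Any]],
                  limit: int = 10) -> List[Tuple[str, float]]:
    """Return the request IDs with the lowest per-query scores, worst first."""
    scored = [
        (query_id, float(entry.get("metric_score", 0.0)))
        for query_id, entry in details.items()
    ]
    return sorted(scored, key=lambda item: item[1])[:limit]


# Hypothetical per-query details, shaped as assumed above.
sample_details = {
    "test_query_1": {"metric_score": 1.0},
    "test_query_2": {"metric_score": 0.0},
}
print(worst_queries(sample_details, limit=1))
```

The request IDs lead back to the original search terms, which makes it easier to see what the poorly performing queries have in common.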

So we have four different ways to improve our search engine:

- Elasticsearch Query

- Index schema

- Indexed Data

- KPI metrics

Adapt the search engine according to the outcomes and make sure to re-run the evaluation after each change. By sticking to clearly defined performance indicators, you can avoid accidentally optimizing past the target and unintentionally degrading the search engine.
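One way to enforce the re-evaluation is a small regression check that compares the KPIs before and after a change, for example as part of an automated test run. This is only a sketch: the function name, the KPI names and all values except the 0.4 from the example above are assumptions.

```python
from typing import Dict, List


def find_regressions(baseline: Dict[str, float],
                     candidate: Dict[str, float],
                     tolerance: float = 0.0) -> List[str]:
    """Return the names of KPIs that are missing or dropped by more than tolerance."""
    regressions = []
    for name, old_score in baseline.items():
        new_score = candidate.get(name)
        if new_score is None or new_score < old_score - tolerance:
            regressions.append(name)
    return regressions


# Baseline from the current query, candidate from a changed query (made-up values).
baseline_kpis = {"recall@10": 0.4, "mrr": 0.3}
candidate_kpis = {"recall@10": 0.45, "mrr": 0.25}

regressed = find_regressions(baseline_kpis, candidate_kpis, tolerance=0.01)
if regressed:
    print("KPIs got worse: " + ", ".join(regressed))
else:
    print("No KPI regression, safe to keep the change.")
```

A small tolerance avoids rejecting a change because of noise, while any larger drop in a single KPI is flagged even if another KPI improved.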

Conclusion

Use the users’ search data to measure the success of our search engine. Optimize the search engine by alternating between changing and measuring: change the search query or the index schema, or enhance the document data. Always make sure to recalculate the search engine’s KPIs after each change to avoid accidentally optimizing past the target and unintentionally degrading the search engine.

What else would you like to know? Let us know and get in touch!

Sounds good? But how does it work in practice? Check out the case study on our webpage.

Written by

Thilo Haas