Digital products in transition - What Google I/O and Microsoft Build reveal about the future

Your users are already talking to the web

Have you noticed how the web is quietly changing? If you ask Google a question today, you no longer simply type in "travel destination Thailand" or "best CRM software". Instead, you use phrases like: "I'm looking for a tropical destination for three weeks in June, ideal for hiking and with good diving opportunities."

And it works - because AI now reads, thinks and talks. Google reports that search queries in AI Mode have become twice as long. Users expect not just results, but real conversations.

Two conferences, one signal

Google I/O and Microsoft Build 2025 have made one thing clear: the big tech platforms are building a new digital reality. Instead of buttons and menus, agents, voice input and system intelligence are taking center stage.

Google is positioning Gemini as an omnipresent assistant - it reads, listens and helps. Microsoft is focusing on an open ecosystem in which websites are operated not only by humans, but also by AI agents. Sounds futuristic? It is already a reality.

Changed user behavior

What does that mean for you? Your users no longer click, they talk. They scroll less and ask more questions. The classic website with a menu structure is being supplemented - or replaced - by dialog-based interfaces.

According to Google, search queries today are not only twice as long, but also significantly more complex. People formulate their intentions. And expect technology to understand them. This is changing everything - from content strategy to navigation.

Zero-click results, AI overviews and semantic search show: if your content cannot be understood by AI, it will simply be ignored. Visibility is no longer achieved through good placement, but through good comprehensibility - for people and machines.

New rules for digital products

AI is no longer just a tool - it is becoming an interface. With Gemini Live, Google analyzes camera and screen content in real time and responds to context rather than just commands. In practice, this means that your users increasingly expect systems to understand them - not just serve them.

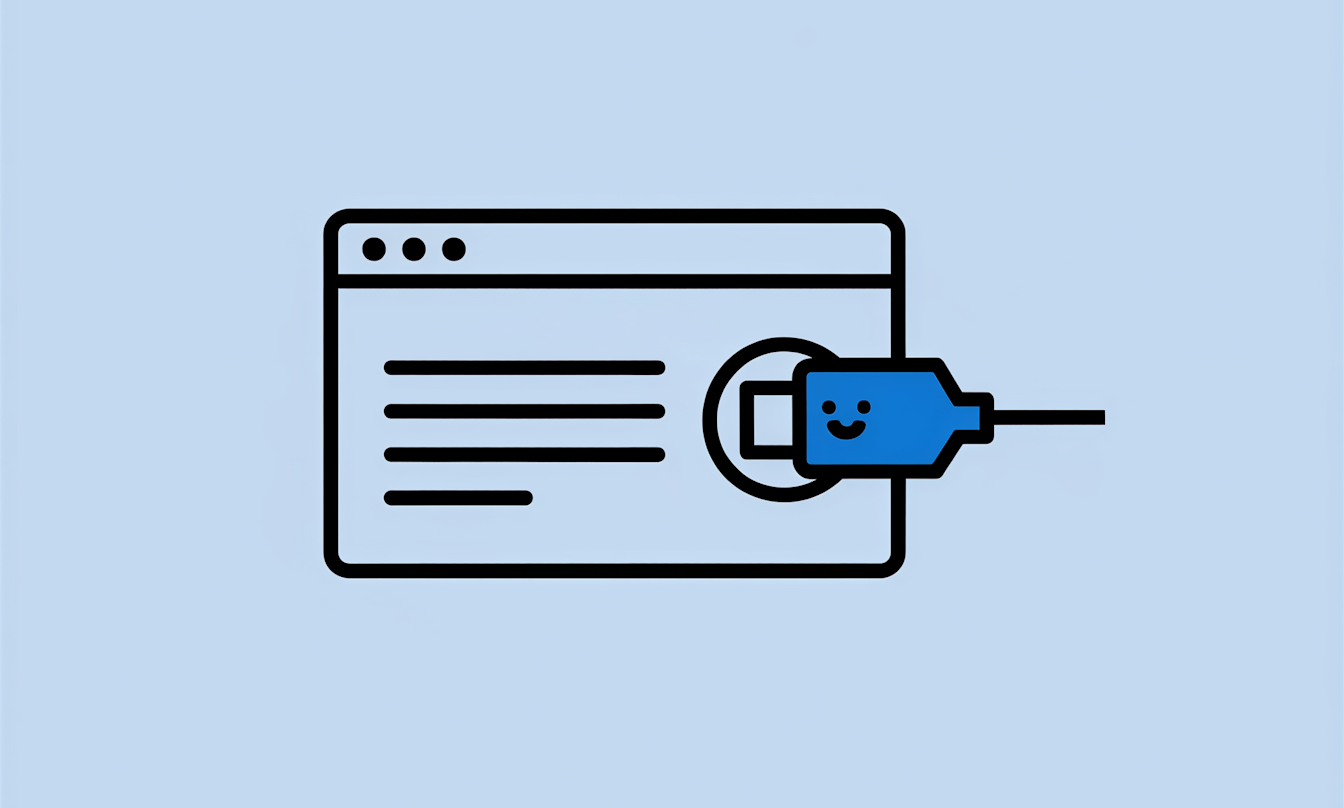

At the same time, a new digital ecosystem is emerging with the "agentic web": websites and services are being designed in such a way that machines - i.e. AI agents - can also interact directly with them. Microsoft's NLWeb protocol is a first building block in this direction. It works like a second language made specifically for agents, so that they can reliably retrieve information and carry out actions.

A central technical link in this transformation is the MCP protocol - the Model Context Protocol. Originally developed by Anthropic and now adopted by Google, Microsoft and OpenAI, MCP standardizes how applications pass context to AI. Think of MCP like a USB-C connector for AI applications: a standardized way to connect AI models to data sources and tools.
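
To make the connector idea more concrete, here is a minimal sketch of what a tool call can look like on the wire. MCP messages follow JSON-RPC 2.0, and `tools/call` is the method a client uses to invoke a tool. The tool name `search_destinations`, its arguments, the tiny catalog and the hand-written dispatcher are hypothetical and only serve as illustration; a real integration would typically use an official MCP SDK instead.

```python
import json

# Hypothetical tool: name, arguments and catalog are illustrative only.
def search_destinations(climate: str, activities: list[str]) -> list[str]:
    catalog = {
        "Koh Tao": {"climate": "tropical", "activities": {"diving", "hiking"}},
        "Reykjavik": {"climate": "cold", "activities": {"hiking"}},
    }
    return [
        name
        for name, info in catalog.items()
        if info["climate"] == climate and set(activities) <= info["activities"]
    ]

TOOLS = {"search_destinations": search_destinations}

def build_tool_call(request_id: int, name: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 request in the shape MCP uses for tool calls."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })

def handle_request(raw: str) -> str:
    """Simplified server side: look up the tool, run it, wrap the result."""
    request = json.loads(raw)
    params = request.get("params", {})
    tool = TOOLS.get(params.get("name"))
    if request.get("method") != "tools/call" or tool is None:
        # Simplification: one generic JSON-RPC "method not found" error
        # (-32601) is returned for both unknown methods and unknown tools.
        error = {"code": -32601, "message": "Unknown method or tool"}
        return json.dumps({"jsonrpc": "2.0", "id": request.get("id"), "error": error})
    result = tool(**params.get("arguments", {}))
    # Tool results carry a list of content items; text is the simplest kind.
    content = [{"type": "text", "text": ", ".join(result)}]
    return json.dumps(
        {"jsonrpc": "2.0", "id": request["id"], "result": {"content": content}}
    )
```

The point of the sketch is the shape of the exchange: the agent does not need to know how your site is navigated, only which tools exist and which arguments they accept.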

For the management of access rights and identities, Microsoft has also introduced Entra Agent ID. This gives AI agents unique, verifiable identities - allowing security and compliance policies to be applied in a similar way as for human users. This is essential if agents are to operate in sensitive company systems.
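
The principle of treating agents like users in access control can be sketched in a few lines. This is not the Entra Agent ID API; `AgentIdentity`, `POLICIES` and `is_allowed` are hypothetical names, and the sketch only shows the idea of tying permissions to an identifiable agent with a deny-by-default rule.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class AgentIdentity:
    agent_id: str      # unique identifier issued to the agent
    owner: str         # human or team accountable for the agent
    roles: frozenset   # roles granted to the agent

# Hypothetical policy table: which roles may perform which action.
POLICIES = {
    "read_calendar": {"assistant", "scheduler"},
    "book_appointment": {"scheduler"},
    "export_customer_data": set(),  # no agent role may do this
}

def is_allowed(agent: AgentIdentity, action: str) -> bool:
    """Deny by default; allow only if one of the agent's roles is listed."""
    allowed_roles = POLICIES.get(action, set())
    return bool(agent.roles & allowed_roles)
```

An agent with only the `assistant` role could read the calendar here, but not book appointments, and any action missing from the table is rejected.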

And last but not least, the boundaries between language, image, touch and gestures are becoming blurred. Multimodal interaction is becoming the norm: you no longer need to know where to click - just say what you want. Your agent will do the rest.

User-centered and agent-capable: how to develop future-proof products

In short, your product no longer just has to work for people - it also has to work for machines.

Build agent endpoints, i.e. interfaces that make your offering understandable for AI. Think in terms of intentions instead of click paths. And build context that remembers: what your user asked yesterday should still be relevant tomorrow.
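
As a rough sketch of what thinking in intentions can mean in code: instead of exposing click paths, an endpoint accepts a named intent with parameters and keeps a small context per user. All names here (`AgentEndpoint`, the `find_trip` intent, the in-memory store) are assumptions for illustration; a production system would need persistence, authentication and consent handling.

```python
from collections import defaultdict
from typing import Callable

class AgentEndpoint:
    """Routes named intents to handlers and remembers context per user."""

    def __init__(self) -> None:
        self._handlers: dict[str, Callable[[dict], str]] = {}
        # In-memory context only; it is lost when the process restarts.
        self._context: dict[str, dict] = defaultdict(dict)

    def register(self, intent: str, handler: Callable[[dict], str]) -> None:
        self._handlers[intent] = handler

    def handle(self, user_id: str, intent: str, params: dict) -> str:
        handler = self._handlers.get(intent)
        if handler is None:
            return f"Unsupported intent: {intent}"
        context = self._context[user_id]
        # Earlier parameters act as defaults, new ones override them.
        context.update(params)
        return handler(dict(context))

def find_trip(params: dict) -> str:
    region = params.get("region", "anywhere")
    month = params.get("month", "any month")
    return f"Searching trips to {region} in {month}"

endpoint = AgentEndpoint()
endpoint.register("find_trip", find_trip)
print(endpoint.handle("user-1", "find_trip", {"region": "Thailand"}))
print(endpoint.handle("user-1", "find_trip", {"month": "June"}))
```

The second call only mentions the month, yet the handler still sees the region from the first call. That is the "context that remembers" in its simplest form.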

Multimodality is becoming standard. Your application should be able to switch easily between voice, touch and text. And it should build trust: What do you store? What do you pass on? Who can read along? These questions determine acceptance.

Your product is increasingly becoming a conversation partner. Whether this is comfortable or scary depends on how well you manage this change.

SEO & Content 2.0

SEO was yesterday. GEO is today: Generative Engine Optimization. In concrete terms, this means that your content must be built in such a way that AI can understand it, summarize it and pass it on.

This includes semantically structured content, clear relationships between topics, and easy-to-read formats such as lists, tables or FAQs. Above all, however, added value counts: AI only recommends what is actually useful. And those who are useful gain reach.
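
One common way to make FAQ content machine-readable is schema.org markup in JSON-LD. The sketch below generates a `FAQPage` object from question and answer pairs. The function name `build_faq_jsonld` and the sample question are made up, and whether a given AI system actually uses this markup is an assumption, not a guarantee.

```python
import json

def build_faq_jsonld(faqs: list[tuple[str, str]]) -> str:
    """Return schema.org FAQPage markup as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in faqs
        ],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)

markup = build_faq_jsonld([
    ("Which plan includes API access?", "API access is part of every paid plan."),
])
# Embed the result in the page inside <script type="application/ld+json">.
print(markup)
```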

Be aware: in future, your content will talk not only to people, but also to their AI companions. If you want your information to appear in the next response, you should make it AI-friendly.

How to prepare yourself

Do you want to not just keep up with digital products, but think ahead? Then you need both: an internal realignment and an understanding of how and where your users will be moving tomorrow.

Here is your 5-step roadmap:

- Set up a use case radar - Where can agents simplify processes or create new experiences? You might find inspiration in this article.

- Rethink your architecture - Which of your APIs are agent-compatible? Where do you need more structured data to make access easier for AI?

- Start a pilot - Start small: a smart FAQ, an appointment assistant, an interface to Gemini or Copilot. Observe how your users react and learn from it.

- Clarify governance - Who is allowed to do what, with what data? Think about role concepts for agents, consent mechanisms and data protection.

- Be present on new platforms - Ask yourself: When AI talks to your users in the future - will your brand be part of the conversation? Make yourself discoverable and connectable for Gemini, ChatGPT, Windows Copilot & Co.

The most important question is:

Where will users encounter your product tomorrow - and in what form?

Written by

Mirco Strässle