Kubernetes: Deployments under control

When your product is ready, it has to run on a computer that makes it available via the Internet (deployment or release). In the past, this was a server that you set up yourself and operated in your own server room or rented from a provider. Today, large companies such as Google or Amazon take care of the hardware, and we use tools such as Kubernetes to control what the setup looks like.

Docker: The same environment everywhere thanks to containers.

Today, many installations can run in parallel on a single (large) computer. Containers keep them apart: containers A and B run on the same computer but know nothing about each other and have no way of communicating with each other (unless this is desired). This is called virtualization.

A virtual container can be compared to a shipping container: a standardized form factor (Docker) allows completely different contents (applications) to be delivered (deployed) by different ships (server hardware).

The best-known technology for container virtualization is Docker. A Docker container packages the software together with everything it needs to run, so that the environment for the software in the container always looks exactly the same, regardless of whether the container is running on a developer's computer or in Google's data center. The result is identical behavior across environments and no bugs caused by a different version of an operating-system component.

Infrastructure defined, deployments stable

Web servers such as nginx and runtime environments such as Node.js can be installed in the container. Such base installations are available in advance as reusable images. Kubernetes automates this setup as well as the starting and stopping of containers. This means the infrastructure can be controlled from A to Z and contains no unknowns. The result is stable deployments, and Kubernetes provides the leverage to adjust running applications to changing requirements.

Highlights

- Fully automated releases: Developers do not have to work through a checklist during deployment. This eliminates sources of error and enables short release cycles.

- Uninterrupted operation: Changes and bug fixes can be activated during production without a maintenance window; as soon as the new container is ready, it takes over.

- Automatic scaling: If the load on the system increases, additional resources are switched on according to predefined rules.

- Self-healing mechanisms: If the system detects a problem with a component, it is restarted in a controlled manner. So Christoph can finally sleep through the night again.
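One widely used form of such a predefined scaling rule is the Kubernetes Horizontal Pod Autoscaler, whose documented core formula is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The Python sketch below reimplements only that arithmetic for illustration. The function name, the min/max bounds and the CPU figures are assumptions, and the real autoscaler additionally handles missing metrics, not-yet-ready Pods and stabilization windows.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Hypothetical helper mirroring the HPA scaling formula (not the real controller)."""
    ratio = current_metric / target_metric
    # Inside the tolerance band (0.1 by default in Kubernetes) no scaling happens.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    # desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)
    desired = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp the result to the configured minimum and maximum.
    return max(min_replicas, min(max_replicas, desired))


# 3 replicas at 90 % CPU with a 50 % target: ceil(270 / 50) = ceil(5.4) = 6
print(desired_replicas(3, current_metric=90, target_metric=50))  # -> 6
```

The tolerance band keeps the system from constantly adding and removing replicas when the measured load hovers just around the target.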

Our experience

We have already used Kubernetes in several projects, including:

Monitoring

Good monitoring requires observability: insight into the current state of the system. Kubernetes provides mechanisms such as readiness and liveness probes for this purpose. They check every container in the same uniform way, so Kubernetes knows whether a container can receive traffic (readiness) or has to be restarted (liveness).
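Readiness and liveness probes are often configured as HTTP checks: Kubernetes calls an endpoint of the container and treats any status code from 200 up to 399 as success. The following standard-library sketch shows what the application side of such probes might look like. The paths `/healthz` and `/ready`, the port, and the `app_state` flag are assumptions for illustration; the paths would have to match whatever is configured in the Pod's probe definition.

```python
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical application state: set to True once start-up work (DB connections, caches) is done.
app_state = {"ready": False}


class ProbeHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path == "/healthz":
            # Liveness: the process is running and able to answer requests.
            self._reply(200, b"ok")
        elif self.path == "/ready":
            # Readiness: only accept traffic once initialisation has finished.
            if app_state["ready"]:
                self._reply(200, b"ready")
            else:
                self._reply(503, b"not ready")
        else:
            self._reply(404, b"not found")

    def _reply(self, status: int, body: bytes) -> None:
        self.send_response(status)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        # Suppress the default per-request logging to stderr.
        pass


def start_server(port: int = 8080, host: str = "0.0.0.0") -> ThreadingHTTPServer:
    """Serve the probe endpoints in a background thread and return the server."""
    server = ThreadingHTTPServer((host, port), ProbeHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

An application would call `start_server()` at start-up and set `app_state["ready"] = True` once it can handle requests; until then, Kubernetes keeps the Pod out of the load balancer without restarting it.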

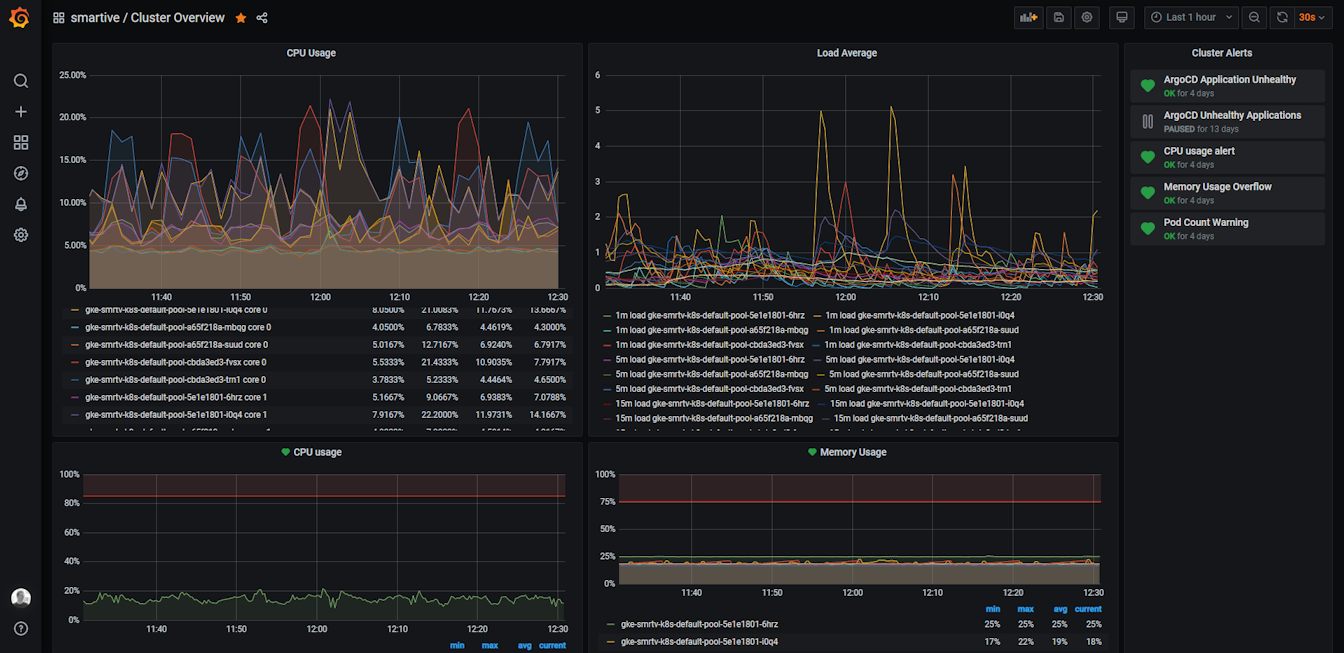

For monitoring, we rely on metrics collection with Prometheus and log collection with Fluentd. The collected data is visualized in a Grafana dashboard, and alerts can be sent to all common messaging systems.

Anything else?

Jargon is the salt in the technical soup, so here is a brief overview. And if you still haven't had enough, we can also recommend our blog post about Kubernetes and Kuby: "How we simplified our Kubernetes deployments with an alternative to Helm".

The cloud

Instead of deploying to the server room next door, you let Kubernetes and Google Cloud work for you. "The cloud" in this case is Google's data center. Google Kubernetes Engine makes setting up and maintaining a Kubernetes cluster in a Swiss data center a breeze. Of course, other major cloud providers also offer this service.

Continuous Integration & Continuous Delivery (CI/CD)

With every code change, a test pipeline ensures that no errors have crept in: it merges the code and tests the result (continuous integration). Keeping release cycles as short as possible means that errors are detected at an early stage (continuous delivery). The deployment pipeline can be fully automated and ensures that the code can be released at any time.
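The core idea of such a pipeline, running stages in a fixed order and stopping at the first failure so that broken code never reaches a release, can be sketched in a few lines of Python. The stage names and commands are hypothetical placeholders; a real pipeline would be defined in the configuration format of the CI system in use.

```python
import subprocess
import sys
from typing import List, Tuple

# A stage is a name plus the command it runs.
Stage = Tuple[str, List[str]]

# Hypothetical pipeline; the commands only print placeholders.
PIPELINE: List[Stage] = [
    ("build", [sys.executable, "-c", "print('building image')"]),
    ("test", [sys.executable, "-c", "print('running tests')"]),
    ("deploy", [sys.executable, "-c", "print('deploying')"]),  # only reached if all earlier stages pass
]


def run_pipeline(stages: List[Stage]) -> bool:
    """Run stages in order; abort at the first non-zero exit code."""
    for name, command in stages:
        print(f"--> {name}")
        result = subprocess.run(command)
        if result.returncode != 0:
            print(f"Stage '{name}' failed; nothing is deployed.")
            return False
    print("All stages passed; the build can be released.")
    return True
```

Calling `run_pipeline(PIPELINE)` runs all three stages; if the test stage exited with a non-zero code, the deploy stage would never start.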

Secrets & configuration management

Kubernetes provides mechanisms (ConfigMaps and Secrets) to configure your application for different environments. With the Kube Sealer we use, it is even possible to store configurations containing passwords in a publicly accessible Git repository (see GitOps pattern): only the designated Kubernetes cluster can read the encrypted information.
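A common way to use these mechanisms is to inject values from a ConfigMap or Secret into the container as environment variables, so the same image runs unchanged in every environment and only the configuration differs. The sketch below shows the application side in Python; the variable names `APP_ENV`, `DATABASE_URL` and `DB_PASSWORD` and the defaults are hypothetical.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class AppConfig:
    environment: str
    database_url: str
    db_password: str


def load_config(env: Optional[Mapping[str, str]] = None) -> AppConfig:
    """Read settings from environment variables (hypothetical names).

    In Kubernetes these would typically be filled from a ConfigMap
    (non-sensitive values) and a Secret (passwords).
    """
    env = os.environ if env is None else env
    password = env.get("DB_PASSWORD")
    if not password:
        # Fail fast instead of starting with an incomplete configuration.
        raise RuntimeError("DB_PASSWORD is not set")
    return AppConfig(
        environment=env.get("APP_ENV", "development"),
        database_url=env.get("DATABASE_URL", "postgresql://localhost:5432/app"),
        db_password=password,
    )
```

Failing at start-up when a required value is missing works well together with readiness probes: a misconfigured Pod never receives traffic.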

Desired State

Unlike with classic or virtualized deployments, for container deployments we declare the so-called desired state. We do not carry out the deployment ourselves but delegate it to Kubernetes: "Please make sure this deployment is running." Kubernetes takes care of the execution and continuously ensures that the desired state is maintained.
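The desired-state principle works as a control loop: compare what should be running with what is running, and derive the actions that close the gap. Kubernetes controllers apply this reconciliation pattern continuously. The toy Python sketch below models a single pass for replica counts only; the data model and the `reconcile` function are simplifications for illustration, not the Kubernetes API.

```python
from typing import Dict, List

# Simplified, hypothetical state: deployment name -> number of running replicas.
State = Dict[str, int]


def reconcile(desired: State, actual: State) -> List[str]:
    """One reconciliation pass: return the actions that move `actual` toward `desired`."""
    actions: List[str] = []
    for name in sorted(desired.keys() | actual.keys()):
        want = desired.get(name, 0)  # no longer declared -> should run 0 replicas
        have = actual.get(name, 0)
        if have < want:
            actions.append(f"start {want - have} x {name}")
        elif have > want:
            actions.append(f"stop {have - want} x {name}")
    return actions


desired = {"web": 3, "worker": 2}
actual = {"web": 1, "worker": 2, "old-job": 1}  # two web containers crashed, old-job is obsolete
print(reconcile(desired, actual))  # -> ['stop 1 x old-job', 'start 2 x web']
```

Because the loop only compares states, the same logic handles a crashed container, a new release and a removed deployment alike.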

Service Discovery & Load Balancing

Kubernetes ensures that your deployments can be reached at a stable address (service discovery) and, if several containers are active, that a load balancer distributes the load across the replicas.
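Inside a cluster, a Service gets a stable DNS name, by default of the form `<service>.<namespace>.svc.cluster.local`, and traffic sent to it is spread across the ready Pods behind it. How exactly it is spread depends on the kube-proxy mode; the Python sketch below uses simple round-robin to illustrate the idea. The registry class, the service name and the Pod addresses are hypothetical.

```python
import itertools
from typing import Dict, Iterator, List


class ServiceRegistry:
    """Toy service discovery: maps a stable service name to its current endpoints."""

    def __init__(self) -> None:
        self._endpoints: Dict[str, List[str]] = {}
        self._cycles: Dict[str, Iterator[str]] = {}

    def register(self, service: str, endpoints: List[str]) -> None:
        # Replacing the endpoint list models Pods being added, removed or rescheduled.
        self._endpoints[service] = list(endpoints)
        self._cycles[service] = itertools.cycle(self._endpoints[service])

    def resolve(self, service: str) -> str:
        """Return the next endpoint for `service` in round-robin order."""
        if not self._endpoints.get(service):
            raise LookupError(f"no ready endpoints for {service}")
        return next(self._cycles[service])


registry = ServiceRegistry()
registry.register("web.shop.svc.cluster.local", ["10.0.0.11:8080", "10.0.0.12:8080", "10.0.0.13:8080"])
print([registry.resolve("web.shop.svc.cluster.local") for _ in range(4)])
```

Clients only ever use the service name; which Pod answers can change from request to request without them noticing.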

GitOps pattern

With the GitOps pattern, all Kubernetes configurations (known as manifests) are saved with the source code in a Git repository. Git logs all changes to the system, making them traceable and allowing them to be undone at any time.