When Proposing the Replacement of a Complex Web Application, Don’t Forget These Ten Aspects

Your task is to analyze a complex web application and propose a replacement for the whole stack. How do you go about it?

This is the second article in a case study series about a project we at smartive did for a large corporation. Before you, as an agency, decide to take on something similar, be sure to read our first article, “5 things to consider before you replace a complex legacy web application”.

With a sufficiently complex organization it’s impossible to learn all the moving parts in advance. That being said, the more you can assess about the application and its environment from the beginning, the better informed your decisions will be and the fewer surprises will arise down the road.

In this article I’ll delineate our analysis of a complex web application, including the considerations behind our proposed changes. You may want to explore these aspects too when drafting a replacement proposal.

1. Function and stakeholders

What does the application do? What is the data flow? Who are the stakeholders? What are their use cases? Have the use cases changed? Are there ways the application could solve those cases better, or solve a broader range of use cases?

While these might sound like simple questions, the answers can be very complex, and it might take a lot of time to flesh out all the functions the application performs, and those it could perform thanks to your improvements. Keep this aspect in mind and revisit it as your analysis proceeds.

Knowing the stakeholders matters for another reason, too: once the new stack is available, you will need to help them migrate to it.

Our case: In short, the application

- imported information about all the various subsidiaries of our client from various sources,

- exposed the data through a REST API, and

- provided various configurable web clients that displayed the subsidiaries on a map, with searching, filtering and detail functionality.

Various stakeholders from inside and outside the organization either pulled data directly from the API or embedded variants of the client, configured for their specific needs, into their web pages.

The application’s main function would, of course, remain the same. The potential we saw lay mainly in a more general, cleaner and faster interface, on both the API and the GUI side.

2. Project structure, versioning and release management

How many repositories does the project have, and how are they organized? What version control system is used? How are project releases tracked?

Our case: The application was a single SVN repository, containing 3 main folders (importer, logic, web).

In our new solution we opted for separate Git repositories for the main functions (importer, API, client code), allowing for better parallel work as well as separate development cycles and releases. We structured the project versions and branches following the GitFlow model.

3. Package and build management

What package manager does the project use? How is the build automated? When are tests run?

Our case: The original system was a Maven project.

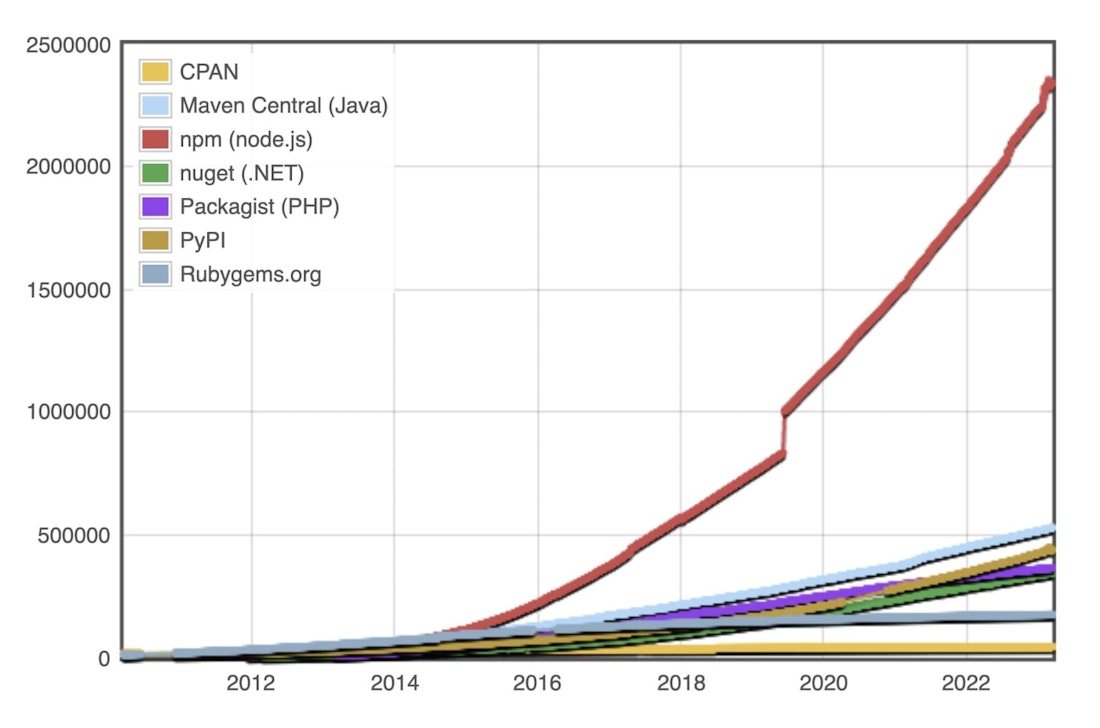

We chose NPM because it fits our core expertise (see the Server technology section on the right) and because of its overwhelming success as a package registry.

4. Integration

On which environment is the application deployed? How? When?

Our case: The application had dev, qual and prod environments; deployment to those systems was configured and triggered manually with Jenkins.

We kept a similar 3-tier structure (dev, test, prod), but automated deployment with GitLab’s continuous integration functionality, building on the aforementioned GitFlow model.

- Updating the develop branch triggers a deployment to dev

- Updating any release/… or hotfix/… branch triggers a deployment to test

- Pushing a new version tag triggers a deployment to prod
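The three rules above amount to a small mapping from a Git ref to a target environment. In a real setup this logic would live in the GitLab CI configuration (`.gitlab-ci.yml`); the sketch below only makes the rules explicit in TypeScript. The `targetEnvironment` helper is hypothetical, and the version tag format `v1.2.3` is an assumption, since the article does not specify it.

```typescript
// Target environments, mirroring the dev/test/prod structure described above.
type Environment = "dev" | "test" | "prod";

// A Git ref as seen by the CI pipeline: either a branch or a tag.
type GitRef = { kind: "branch"; name: string } | { kind: "tag"; name: string };

// Assumption: version tags follow the pattern v<major>.<minor>.<patch>.
const VERSION_TAG = /^v\d+\.\d+\.\d+$/;

// Hypothetical helper: returns the environment a push to `ref` deploys to,
// or null if the push should not trigger a deployment.
function targetEnvironment(ref: GitRef): Environment | null {
  if (ref.kind === "tag") {
    return VERSION_TAG.test(ref.name) ? "prod" : null;
  }
  if (ref.name === "develop") {
    return "dev";
  }
  if (ref.name.startsWith("release/") || ref.name.startsWith("hotfix/")) {
    return "test";
  }
  // Other branches (e.g. feature/… or master) trigger no deployment in this sketch.
  return null;
}
```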

5. Server technology

What server technology and frameworks does the application use?

Our case: The application was built on Java Spring and ran on Tomcat.

At smartive we build heavily on Node.js, because of its incredibly dynamic ecosystem and because it allows us to use a single language for the full stack. Our experience is that TypeScript is very well suited for enterprise applications, while still allowing for the agility needed in a modern web agency. To build the API we used Giuseppe, an annotation-based controller routing system we built in-house.

6. Client technology

What web frameworks are used to run the client code?

Our case: The client side was heavily based on custom jQuery plugins, including an obscure template system called jquery-tmpl.

Unfortunately, here we weren’t able to pick our own favorite technology (currently that would be either Angular 2 or React). The requirement was to implement the new client widget as part of a client-specific shared components library built with Exoskeleton. That was still a big improvement over the original pure jQuery implementation.

7. External data dependencies

Does the system import data from other sources? With what frequency? What technologies are used? Who is responsible for those interfaces?

Our case: The system imported data from various systems (Oracle databases, SAP, even Excel, CSV and XML files) using REST, SOAP and an FTP server. Some of the imports ran daily, others hourly.

Together with the client, we found ways to consolidate the data sources and minimize the reliance on FTP. This will not always be possible, since the data sources are largely outside your control.
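One way to keep such heterogeneous imports manageable is to put each source behind a common adapter interface with its own schedule, and to merge everything into one normalized record per store. The sketch below is hypothetical: `SourceAdapter`, `StoreRecord`, `dueAdapters` and `mergeRecords` are illustrative names, not the project’s actual code; only the hourly and daily frequencies come from the text above.

```typescript
// Import frequencies observed in the legacy system.
type Frequency = "hourly" | "daily";

// Hypothetical normalized record that every source is mapped into.
interface StoreRecord {
  storeId: string;
  fields: Record<string, unknown>;
}

// Hypothetical adapter: one per external source (Oracle, SAP, CSV via FTP, ...).
interface SourceAdapter {
  name: string;
  frequency: Frequency;
  fetch(): Promise<StoreRecord[]>;
}

const INTERVAL_MS: Record<Frequency, number> = {
  hourly: 60 * 60 * 1000,
  daily: 24 * 60 * 60 * 1000,
};

// Returns the adapters whose interval has elapsed since their last run.
// Adapters that have never run (no entry in lastRun) are always due.
function dueAdapters(
  adapters: SourceAdapter[],
  lastRun: Map<string, number>,
  now: number,
): SourceAdapter[] {
  return adapters.filter((adapter) => {
    const last = lastRun.get(adapter.name);
    return last === undefined || now - last >= INTERVAL_MS[adapter.frequency];
  });
}

// Merges batches from several sources into one record per store.
// Fields from later batches are added to, or override, earlier ones.
function mergeRecords(batches: StoreRecord[][]): Map<string, StoreRecord> {
  const merged = new Map<string, StoreRecord>();
  for (const batch of batches) {
    for (const record of batch) {
      const existing = merged.get(record.storeId);
      merged.set(record.storeId, {
        storeId: record.storeId,
        fields: { ...(existing ? existing.fields : {}), ...record.fields },
      });
    }
  }
  return merged;
}
```

Keeping each source behind the same interface would also make it easier to swap one source for another, which is exactly what consolidating data sources requires.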

8. Data model

A crucial point. How is the data structured? Is it well-structured / normalized? How does the data structure relate to the actual use cases? How well does it serve them? Are there important differences between how the data is structured in the external source systems, how it is stored in the database, how it is served in the API and how it is actually used by the client(s)?

Through the data model(s) you will get an intuition of what the system was built for and how it has grown over time. Anything you don’t understand about the data model probably points to a use case you didn’t foresee.

Being able to propose a brand new API is an absolute luxury and should be taken advantage of. There is no better moment to carefully clean up the data structure, remove deprecated fields, provide different “verbosities”, introduce more consistent naming, etc.
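To make the idea of “verbosities” concrete, here is a minimal sketch in which the API serves the same Store at two levels of detail. The level names (`compact`, `full`), the fields and the `serializeStore` function are assumptions for illustration only.

```typescript
// Hypothetical verbosity levels a client could request, e.g. via ?verbosity=compact.
type Verbosity = "compact" | "full";

interface LatLng {
  latitude: number;
  longitude: number;
}

// Hypothetical Store shape; the field names are illustrative.
interface Store {
  id: string;
  name: string;
  location: LatLng;
  address: string;
  phone?: string;
}

// What a map marker needs: identity and position, nothing else.
type CompactStore = Pick<Store, "id" | "name" | "location">;

// Returns only the fields appropriate for the requested verbosity.
function serializeStore(store: Store, verbosity: Verbosity): CompactStore | Store {
  if (verbosity === "compact") {
    const { id, name, location } = store;
    return { id, name, location };
  }
  return store;
}
```

With a design like this, a map view could fetch small payloads for many stores, while a detail view requests the full object for a single one.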

Our case: The principal object type was a Store. Each Store had a Location and multiple Assortments, which in turn offered various types of Services.

Examples of things we were able to clean up with the migration:

- Upon further inspection we discovered that Assortments were a denormalized list containing items of two different types: actual Assortments and something else we then called Markets. Clients had to parse these mixed lists and filter out what they needed. Additionally, these lists were served as a key-value map instead of an array, which caused serious problems whenever a Store had multiple Assortments of the same type. Our redesign disentangled all of this.

- Another aspect was opening hours: in the application, both Stores and Markets supported opening hours, but in the client applications we saw that it would be simpler to have Market opening hours only. When we analyzed the data coming from the original source and how it was imported, we discovered that Store opening hours were an artifact of the importer anyway, which simply copied the opening hours of a specific Market. In our redesign, we happily discarded Store opening hours.

This is the main point: it’s really important to understand the data model and how it came to be. Don’t assume that the structure you inherit is the best one; instead, ask yourself whether it (still) makes sense. Such observations can open up significant opportunities to develop a cleaner and simpler application.
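The two cleanups can be illustrated with a small sketch. It assumes a hypothetical `kind` field that distinguishes real Assortments from Markets in the raw data (the article does not say how the two were told apart) and shows why a map keyed by type loses entries while separate arrays do not. In line with the second point, opening hours live only on Markets. All names here are illustrative.

```typescript
// Hypothetical raw entry from the legacy "Assortments" list, which mixed two types.
interface RawEntry {
  kind: "assortment" | "market"; // assumed discriminator
  type: string;
  name: string;
  openingHours?: string;
}

interface Assortment {
  type: string;
  name: string;
}

// In the redesign only Markets carry opening hours.
interface Market {
  type: string;
  name: string;
  openingHours?: string;
}

// Legacy-style output: a map keyed by type. A second entry with the same
// type silently overwrites the first one.
function toLegacyMap(entries: RawEntry[]): Record<string, RawEntry> {
  const map: Record<string, RawEntry> = {};
  for (const entry of entries) {
    map[entry.type] = entry;
  }
  return map;
}

// Redesigned output: two separate arrays, so clients no longer filter a
// mixed list and entries with duplicate types are preserved.
function toRedesigned(entries: RawEntry[]): { assortments: Assortment[]; markets: Market[] } {
  const assortments: Assortment[] = [];
  const markets: Market[] = [];
  for (const { kind, type, name, openingHours } of entries) {
    if (kind === "assortment") {
      assortments.push({ type, name });
    } else {
      markets.push({ type, name, openingHours });
    }
  }
  return { assortments, markets };
}

const entries: RawEntry[] = [
  { kind: "assortment", type: "food", name: "Fresh food" },
  { kind: "assortment", type: "food", name: "Frozen food" },
  { kind: "market", type: "pharmacy", name: "Pharmacy", openingHours: "Mo-Fr 08:00-18:00" },
];

console.log(Object.keys(toLegacyMap(entries)).length); // 2: one "food" entry was lost
console.log(toRedesigned(entries).assortments.length); // 2: both "food" entries kept
```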

9. Data storage

How is data stored and retrieved?

Our case: The application stored the imported data in a MySQL database.

During our analysis we ascertained the following data storage requirements:

- Heavy focus on search, including fuzzy search

- No data writes, except a periodic update of the full data store

- Data is always served with the same redundant structure

- Parts of the data have a flexible structure

Because of these requirements, we judged that a relational database was not the optimal system for storing the subsidiary data. Instead, we opted for Elasticsearch, which lets us store structured data and search through it efficiently. Upon each import we create a completely new index (actually three, one for each supported language).
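The index-per-import approach can be sketched as follows. The article only states that each import creates fresh indices, one per language; everything else here is an assumption of the sketch: the language codes, the index naming scheme, pointing a stable alias at the newest index via the Elasticsearch `_aliases` API, and using a `match` query with `fuzziness: "AUTO"` on a hypothetical `name` field for fuzzy search. The functions only build request bodies, so they run without a cluster.

```typescript
// Hypothetical language codes; the article only says there were three.
const LANGUAGES = ["de", "fr", "it"] as const;
type Language = (typeof LANGUAGES)[number];

// Stable alias that the API queries, e.g. "stores-de".
function aliasName(lang: Language): string {
  return `stores-${lang}`;
}

// A fresh, uniquely named index per import run and language,
// e.g. "stores-de-20240101120000" (naming scheme is an assumption).
function indexName(lang: Language, importId: string): string {
  return `${aliasName(lang)}-${importId}`;
}

// Body for POST /_aliases: repoint the alias from the old index to the
// freshly imported one. Elasticsearch applies these actions atomically,
// so readers never query a half-filled index.
function aliasSwapBody(lang: Language, oldIndex: string | null, newIndex: string) {
  const alias = aliasName(lang);
  const actions: object[] = [];
  if (oldIndex !== null) {
    actions.push({ remove: { index: oldIndex, alias } });
  }
  actions.push({ add: { index: newIndex, alias } });
  return { actions };
}

// Body for a fuzzy full-text search on a hypothetical "name" field.
function fuzzySearchBody(text: string) {
  return {
    query: {
      match: {
        name: { query: text, fuzziness: "AUTO" },
      },
    },
  };
}
```

Since the data is never written outside the periodic import, building a complete new index and switching over to it avoids any partial updates to the index being read.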

10. Stack replacement strategy

As we discussed in the previous article, replacing such a big application might take months or even years. You can’t just stop supporting the legacy system while you develop the new one. You need a strategy.

Our case: Roughly speaking, we proceeded as follows.

Setup phase:

- Implement a new importer that imports data via the API of the legacy system

- Implement a new API

- Implement a new client based on the new API

Migration phase:

- Incrementally replace imports from the legacy API with imports from the original data sources

- In parallel, gradually help stakeholders migrate to the new API or to the new clients

As soon as all data is imported from the original data sources and all stakeholders have migrated to the new stack, the old stack can be deprecated.
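The end condition of the migration phase can be written down as a simple check, which can also serve as a progress report along the way. This is a hypothetical sketch: the `MigrationState` shape and the entity and stakeholder names are illustrative, not taken from the project.

```typescript
// Where the new importer currently gets a given kind of data from.
type ImportSource = "legacy-api" | "original-source";

// Hypothetical snapshot of the migration's progress.
interface MigrationState {
  // e.g. { stores: "original-source", services: "legacy-api" }
  importSources: Record<string, ImportSource>;
  // Stakeholders still using the legacy API or legacy clients.
  pendingStakeholders: string[];
}

// Kinds of data that are still pulled from the legacy system's API.
function remainingLegacyImports(state: MigrationState): string[] {
  return Object.keys(state.importSources).filter(
    (entity) => state.importSources[entity] === "legacy-api",
  );
}

// The old stack can be deprecated only when both conditions hold.
function canDeprecateLegacy(state: MigrationState): boolean {
  return remainingLegacyImports(state).length === 0 && state.pendingStakeholders.length === 0;
}
```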

Written by

Nicola Marcacci Rossi