How RAG makes our chatbot the perfect company assistant

Last year, we asked ourselves: How can we make our internal documents even more accessible for our employees? The answer was obvious: a chatbot. But not one that answers questions about life, the universe and everything; one that helps employees find relevant internal information.

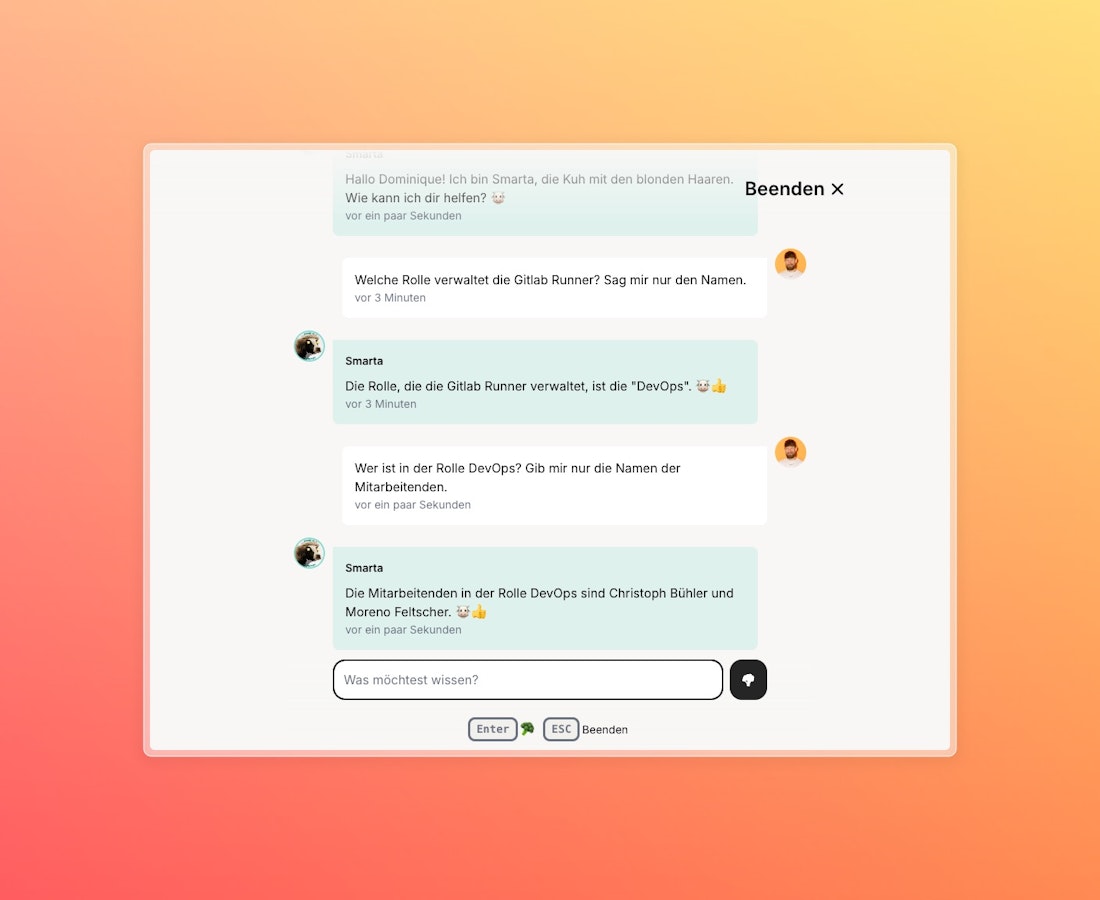

Imagine a digital assistant that knows your company inside out and provides you with exactly the answer you need in a matter of seconds. We have developed just such an assistant.


In this blog post, I'll take you behind the scenes and show you what it takes to create a Best Buddy Bot and not a Bad Broken Bot:

- Retrieval-Augmented Generation (RAG) ties everything together. It distills each input to its essence, retrieves the matching information and repackages it into an answer.

- The necessary data, saved as vectors

- Vercel AI SDK for the necessary fairy dust

RAG: The key to the intelligence of our chatbot

RAG (Retrieval-Augmented Generation) combines a knowledge base with a language model: it searches for relevant information and processes it into a precise, context-specific answer.

This is how it works in detail:

- Analyze question: Every time you ask a question, the chatbot first tries to understand what you want to know. With the help of Natural Language Processing (NLP), it breaks your question down into smaller components and grasps the meaning behind it.

- Search info: Once the chatbot has understood what you want, it searches for the appropriate information in a special database. Rather than comparing texts word by word, this database works with mathematical vectors that capture their meaning. The chatbot finds the vectors that come closest to your question and retrieves the corresponding text passages.

- Create answer: Now the Large Language Model (LLM) comes into play. It combines the retrieved information with its own knowledge to generate an answer that is not only correct but also tailored to your specific question.
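The three steps can be sketched as a small pipeline. This is a minimal illustration under stated assumptions, not Smarta's actual code: `embed`, `search` and `generate` are hypothetical injected functions standing in for the embedding model, the vector database and the LLM, and the prompt wording is made up for the example.

```typescript
// Hypothetical sketch of the three RAG steps with injected services.
type Chunk = { text: string; source: string };

interface RagDeps {
  embed: (text: string) => Promise<number[]>;                // step 1: question -> vector
  search: (vector: number[], k: number) => Promise<Chunk[]>; // step 2: nearest text chunks
  generate: (prompt: string) => Promise<string>;             // step 3: LLM call
}

// Build an augmented prompt: numbered retrieved context first, then the question.
function buildPrompt(question: string, chunks: Chunk[]): string {
  const context = chunks
    .map((c, i) => `[${i + 1}] (${c.source}) ${c.text}`)
    .join("\n");
  return [
    "Answer the question using the context below.",
    "If the context does not contain the answer, say so.",
    "",
    "Context:",
    context,
    "",
    `Question: ${question}`,
  ].join("\n");
}

// Run the pipeline: embed the question, retrieve k chunks, let the LLM answer.
async function answerQuestion(question: string, deps: RagDeps, k = 3): Promise<string> {
  const queryVector = await deps.embed(question);
  const chunks = await deps.search(queryVector, k);
  return deps.generate(buildPrompt(question, chunks));
}
```

Injecting the three services keeps the orchestration testable with stubs and independent of any particular model provider or database.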

«The great thing about RAG? It not only makes your chatbot smarter, but also more flexible. It can always access the latest information, no matter how the data situation changes.»

Our chatbot's database: how we select the right sources

A chatbot is only as smart as the data available to it. In order for it to provide helpful, precise answers, it needs to access the right data sources. But how do you get meaningful data?

- Create a data inventory: Before feeding our chatbot any information, we took stock. Which data sources are already available? Where is useful information lying dormant that we could make easily accessible?

- Select relevant data: Not everything is useful to the chatbot. Our bot must be able to provide information about internal processes, current projects and technical details, so we limited ourselves to sources that cover exactly this content.

Our chatbot draws on several data sources: our website, our internal knowledge management system Notion and PDF documents in Google Drive. This ensures that it always has the most relevant information at its fingertips, no matter where that information originally comes from.
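The two steps above, taking stock and filtering by topic, could be captured in a small typed inventory. This is a hypothetical sketch: the source IDs, the `Topic` tags and `selectRelevant` are illustrative assumptions, not our actual configuration.

```typescript
// Hypothetical data inventory; IDs and topic tags are illustrative only.
type SourceKind = "website" | "notion" | "google-drive-pdf";
type Topic = "processes" | "projects" | "technical" | "other";

interface DataSource {
  id: string;
  kind: SourceKind;
  topics: Topic[];
}

const inventory: DataSource[] = [
  { id: "company-website", kind: "website", topics: ["projects", "technical"] },
  { id: "notion-wiki", kind: "notion", topics: ["processes", "projects", "technical"] },
  { id: "drive-pdfs", kind: "google-drive-pdf", topics: ["processes"] },
  { id: "drive-misc", kind: "google-drive-pdf", topics: ["other"] },
];

// Topics the bot must be able to answer questions about.
const RELEVANT: ReadonlySet<Topic> = new Set<Topic>(["processes", "projects", "technical"]);

// Keep only sources that cover at least one relevant topic.
function selectRelevant(sources: DataSource[]): DataSource[] {
  return sources.filter((s) => s.topics.some((t) => RELEVANT.has(t)));
}
```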

From text to vector: how our chatbot processes information

After collecting the data, we use LangChain to split the texts into manageable chunks. We then use OpenAI Embeddings to transform these text snippets into vectors: numerical representations that let the computer capture meaning and context.
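To show what chunking means in practice, here is a deliberately simplified splitter: fixed-size character windows with overlap. It is a stand-in for illustration, not LangChain's algorithm (LangChain's text splitters prefer to break on paragraph and sentence boundaries), and the default sizes are assumptions.

```typescript
// Simplified stand-in for a text splitter: fixed-size character windows
// that overlap, so text cut at one boundary still appears whole in the
// neighboring chunk. Default sizes are illustrative assumptions.
function splitIntoChunks(text: string, chunkSize = 1000, chunkOverlap = 200): string[] {
  if (chunkOverlap >= chunkSize) {
    throw new Error("chunkOverlap must be smaller than chunkSize");
  }
  const chunks: string[] = [];
  const step = chunkSize - chunkOverlap;
  for (let start = 0; start < text.length; start += step) {
    chunks.push(text.slice(start, start + chunkSize));
    if (start + chunkSize >= text.length) break; // this window reached the end
  }
  return chunks;
}
```

For example, `splitIntoChunks("abcdefghij", 4, 2)` yields `["abcd", "cdef", "efgh", "ghij"]`: each chunk shares two characters with its neighbor.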

These embeddings help us find content with similar meanings, even if the exact words are different. Imagine words and sentences plotted on a kind of map, with similar things close together and very different things far apart: "Pet Mountain" with dog and cat at one end, "Nerd Valley" with IPv6, React, "one does not simply push to prod" and Bobby Tables at the other.
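"Close together" on this map is usually measured with cosine similarity. The sketch below uses hand-picked 3-dimensional toy coordinates (made up for illustration; real embeddings have far more dimensions) to show that dog and cat score high while dog and IPv6 score low.

```typescript
// Toy "map": made-up 3-D coordinates standing in for real embeddings.
const toyMap: Record<string, number[]> = {
  dog: [0.9, 0.8, 0.1],
  cat: [0.85, 0.9, 0.05],
  IPv6: [0.1, 0.05, 0.95],
  React: [0.05, 0.15, 0.9],
};

// Cosine similarity: 1 means same direction (similar meaning),
// values near 0 mean the vectors are unrelated.
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) throw new Error("dimension mismatch");
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}
```

With these coordinates, dog and cat come out at about 0.995, dog and IPv6 at about 0.19: one is on Pet Mountain, the other in Nerd Valley.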

Finally, the vectors end up in a special database from which the chatbot can retrieve the most relevant information at lightning speed.
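What the database does on each query can be approximated with an in-memory top-k search. This sketch is a stand-in, not our actual database: it assumes all vectors are normalized to length 1 (OpenAI documents this for its embeddings), so the plain dot product equals the cosine similarity and no norms need to be computed.

```typescript
// In-memory stand-in for a vector database. Assumes every stored vector
// and every query vector has length 1, so dot product == cosine similarity.
interface StoredChunk {
  id: string;
  text: string;
  vector: number[];
}

function dot(a: number[], b: number[]): number {
  let sum = 0;
  for (let i = 0; i < a.length; i++) sum += a[i] * b[i];
  return sum;
}

// Score every chunk against the query and return the k best matches.
function topK(query: number[], store: StoredChunk[], k: number): { chunk: StoredChunk; score: number }[] {
  return store
    .map((chunk) => ({ chunk, score: dot(query, chunk.vector) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k);
}
```

This linear scan is fine for a few thousand chunks; dedicated vector databases typically use approximate nearest-neighbor indexes to stay fast at larger scale.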

To keep the "map" up to date, the embedding process in our cloud runs once a day. This process retrieves the information from our data sources and processes it into points on the map.
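The daily run can be pictured as a small ingestion job: fetch, split, embed, store. In this hypothetical sketch `fetchDocuments`, `split`, `embed`, `upsert` and `runDailyEmbedding` are assumed names; the `embed` dependency could, for example, wrap the AI SDK's `embedMany`. Scheduling is assumed to come from the cloud provider (e.g. a cron expression such as `0 3 * * *`), not from this code.

```typescript
// Hypothetical daily embedding job with injected dependencies.
interface SourceDocument { id: string; text: string }
interface IndexedChunk { id: string; text: string; vector: number[] }

interface IngestDeps {
  fetchDocuments: () => Promise<SourceDocument[]>;
  split: (text: string) => string[];
  embed: (texts: string[]) => Promise<number[][]>; // one vector per text, same order
  upsert: (chunks: IndexedChunk[]) => Promise<void>;
}

// Returns the number of chunks written to the vector store.
async function runDailyEmbedding(deps: IngestDeps): Promise<number> {
  const documents = await deps.fetchDocuments();
  let count = 0;
  for (const doc of documents) {
    const texts = deps.split(doc.text);
    if (texts.length === 0) continue; // nothing to index
    const vectors = await deps.embed(texts);
    // Deterministic IDs: a rerun overwrites yesterday's points instead of duplicating them.
    const chunks = texts.map((text, i) => ({ id: `${doc.id}#${i}`, text, vector: vectors[i] }));
    await deps.upsert(chunks);
    count += chunks.length;
  }
  return count;
}
```

Note that deterministic IDs alone do not remove chunks of deleted or shortened documents; those would need a separate cleanup step.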

The boost for our smart app: integration of the Vercel AI SDK

To keep the development of the chatbot in our internal Progressive Web App (PWA) as simple and flexible as possible, we rely on Vercel's AI SDK. The SDK helps us bring the different technological building blocks together and gives the bot its magic.

First and foremost, the SDK standardizes the integration of AI models across different providers, so we don't have to worry about provider-specific details. This gives us more time to focus on the essentials: a great, reliable application.

The SDK also makes it easier to connect tools that talk to our systems. Here is an example of how we register a tool that draws on the vectorized data from our RAG pipeline to build the response.

```typescript
import { openai } from "@ai-sdk/openai";
import { convertToCoreMessages, embed, streamText, tool } from "ai";
import { z } from "zod";

// Allow streaming responses for up to 30 seconds
export const maxDuration = 30;

export const POST = async (req: Request) => {
  const { messages } = await req.json();

  const result = await streamText({
    // Placeholder: replace with the ID of the chat model you use
    model: openai("OPENAI_MODEL"),
    messages: convertToCoreMessages(messages),
    // Step 1: the model calls the tool; step 2: it answers using the tool result
    maxSteps: 2,
    system: `
// Smarta's Persona
// ...
Always use the getRelevantDocuments tool to find matching documents for a request.`,
    tools: {
      getRelevantDocuments: tool({
        description: "Find matching documents for a request.",
        parameters: z.object({
          message: z
            .string()
            .describe("Input for which a matching document should be found."),
        }),
        execute: async ({ message }) => {
          // Embed the request; this must be the same embedding model
          // that was used to vectorize the documents
          const { embedding } = await embed({
            model: openai.embedding("OPENAI_EMBEDDINGS_MODEL"),
            value: message,
          });

          // ... database query

          return "Your answer";
        },
      }),
    },
  });

  return result.toDataStreamResponse();
};
```

With the help of tools, the bot could also answer a question such as "What is the weather like in St. Gallen and Zurich?" by calling a corresponding tool, requesting the necessary weather data and returning a structured answer. We could then display this in the chat with a suitable UI element.
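To illustrate what the SDK handles for us in such a case, here is a plain-TypeScript sketch of dispatching tool calls. Everything in it is hypothetical: the `getWeather` tool, its `{ city }` argument, the `Weather` shape and the injected `fetchWeather` function; no real weather API is called. When a tool defines `execute`, the AI SDK performs this dispatching itself.

```typescript
// Hypothetical tool dispatch: the model requests calls, we run them
// and hand structured results back.
type ToolFn = (args: Record<string, unknown>) => Promise<unknown>;
type ToolCall = { toolName: string; args: Record<string, unknown> };
type ToolResult = { toolName: string; result: unknown };

interface Weather { city: string; temperatureC: number; condition: string }

// Register tools; fetchWeather is injected so no real API is hard-wired.
function createTools(fetchWeather: (city: string) => Promise<Weather>): Record<string, ToolFn> {
  return {
    getWeather: async (args) => {
      if (typeof args.city !== "string") throw new Error("city must be a string");
      return fetchWeather(args.city);
    },
  };
}

// The model may request several calls in one step (one per city);
// run them in parallel, keeping the order of the requests.
async function runToolCalls(calls: ToolCall[], tools: Record<string, ToolFn>): Promise<ToolResult[]> {
  return Promise.all(
    calls.map(async ({ toolName, args }) => {
      const fn = tools[toolName];
      if (!fn) throw new Error(`unknown tool: ${toolName}`);
      return { toolName, result: await fn(args) };
    }),
  );
}
```

Because the results are structured objects rather than free text, the chat UI can render them with a dedicated component instead of parsing the model's prose.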

Conclusion: Our chatbot as a smart assistant

Our internal chatbot is more than a nice gimmick: it has become a helpful assistant for our team. With RAG (Retrieval-Augmented Generation), we ensure that the relevant information is found quickly and accurately. We combine NLP, LLMs and a smart vector database to generate answers that are both accurate and up to date. Vercel's AI SDK puts the finishing touches on the chatbot.

The result: Smarta is always at the service of any smartie in need and turns access to knowledge into a simple chat with a buddy who always answers immediately and knows everything.

Written by

Dominique Wirz